Chapter 6 Inferential statistics: Take 1

This chapter and the next are going to introduce inferential statistics in R, but from two different perspectives. This chapter will focus on doing all of the calculations involved in statistical inference “by hand” (where here we really mean using R to implement the formulas/ideas). The next chapter will show you how to perform many of the same calculations using functions native to R. It is valuable knowing how to do both so that you can check one or the other or possibly write your own type of statistical test one day!

Both chapters will be organized according to the type of variables we will be analyzing. So, for example, we’ll start with statistical inference for a single categorical variable. One can (and probably should) read the “by hand” section of this chapter in tandem with the corresponding native function section of the next chapter. This chapter will include a brief summary of the ideas involved in statistical inference, but the next will just go straight into the details.

The code chunk below attaches all packages we’ll use in this chapter and sets a seed which basically determines a starting point for some of the randomization we’ll employ in the chapter. Setting a seed insures that the same random samples will be drawn every time the code is run.

library(tidyverse)

library(knitr)

library(openintro)

library(infer)

library(patchwork)

set.seed(1187)6.1 Basics of statistical inference

We will learn about three primary types of statistical inference in this class, each aimed at answering different types of questions. To illustrate the types of questions we endeavor to answer, think about analyzing the political landscape in America. You could ask:

What proportion of Americans identify as politically independent?

Are there more politically independent people in Montana than in Idaho?

How what proportion of voters will identify as politically independent in the future?

The first question is asking for an estimate of a population parameter (the true proportion of independents in America). The second question, on the other hand, is asking about a difference between two population parameters. The third requires a prediction based on past data. These types of questions are certainly related (for instance, you could answer the second question by estimating each of the two population parameters), but the most common tools for answering them are slightly different.

Our main tool for answering the first question above is a confidence interval, which uses an estimate of the amount of variable we expect between samples to provided a range of plausible values for the population parameter. The tool we use to answer the second question is called a hypothesis test; these test assess how likely or unlikely your sample is if there were no differences. Hypothesis tests involve understanding the amount of variability we expect to see between samples. We access this this quantity by understanding the distribution of possible samples. The probability distribution associated to all possible samples is called a sampling distribution, which we discuss more in the next section.

6.1.1 Sampling distributions

Taking a simple random sample is, of course, a random process. Assigning a number like the sample proportion or sample average to this sample, then, naturally turns sample statistics into random variables. Since we can think of each sample statistic as a random variable, each sample statistic has an associated probability distribution called a sampling distribution! The overall population under consideration determines the sampling distribution and we almost never have access to population-level data, so you may wonder how or if we have any hope of understanding the sampling distribution of our statistic of interest. In particular, we hope to understand the shape, the center, and the spread of the sampling distribution. There are two primary was of doing this: through simulation and through theory. This class touches briefly on the simulation approach, but focuses mostly on the theoretical approach to accessing sampling distributions.

Before moving on to describe these approaches, we need a quick definition that helps us differentiate from talking about a population distribution and talking about a sampling distribution. The standard error, abbreviated SE, of a sampling distribution is simply its standard deviation.

One of the miracles of statistics (Note: it is not a real miracle since it is a mathematical theorem, but it amazes the author to this day.) is the Central Limit Theorem. We will state the Central Limit Theorem in a few different ways throughout this chapter, but each version essentially says the same thing. Namely, if you have a sufficiently large (caution: this means different things in different contexts) and high quality sample (IE a sample with independent observations, usually obtained through random sampling), the sampling distribution will be symmetric and unimodal with its center at the true population parameter. Moreover, as the sample size increases, the standard error decreases. In other words, all sample statistics will cluster around the true population parameter and observing a sample stat above the population parameter is just as likely as observing one below. The fact that the standard error decreases as the sample size increases codifies our intuition that “large samples are usually better,” since it implies that a sample statistic calculated from a larger sample is more likely to be close to the true population parameter than one calculated from a smaller sample.

Before moving on to discuss how we use sampling distributions, let’s try to make this a bit more concrete by simulating a sampling distribution using a technique called bootstrapping which takes a large, random sample, and resamples it many times with replacement to simulate the process of taking many different samples. We’ll use the gss2010 data set from the openintro package which records the highest educational attainment (among other things) of 2044 randomly sampled Americans in 2010.

Suppose we’re interested in knowing more about the proportion of all Americans who do not have a high school diploma. The gss2010 data set serves as our sample and we can use the sample proportion \(\hat{p}\), read as “p hat”, as a point estimate for the true population proportion \(p\).

## [1] 0.1492172So our sample indicates that in 2010, about 15% of the American population had less than a high school diploma. But this is only a sample of 2044 Americans. If we took another sample of 2044 people and calculated the same proportion, how different do we expect that proportion to be? We can simulate this by drawing from our original sample, but doing so with replacement.

# Take sample

sample2 <- sample( gss2010$degree, size = 2044, replace = TRUE)

#Find proportion of high school dropouts

pHat2 <- sum(sample2 == "LT HIGH SCHOOL") / length(sample2)

pHat2## [1] 0.1364971This sample proportion is different, but not by too much. Of course, 2 different samples don’t provide enough evidence to make any conclusions about the amount of variability we should expect among all possible samples. To estimate the standard error, we need to simulate many more samples. We’ll use the following code, using commands from the infer package, to do this. The code below takes 5000 samples of size 2044 from our original sample again with replacement, changes the degree column in each sample to a binary outcome (no HS diploma, or other), counts the number of people in each sample without a high school diploma, then calculates the corresponding sample proportion.

simulated_sampling_distr <- gss2010 %>%

rep_sample_n(size = 2044, reps = 5000, replace = TRUE) %>%

mutate( degree = if_else(degree == "LT HIGH SCHOOL", "LT HIGH SCHOOL", "other")) %>%

count(degree) %>%

mutate(p_hat = n / sum(n)) %>%

filter( degree == "LT HIGH SCHOOL")

head(simulated_sampling_distr)## # A tibble: 6 × 4

## # Groups: replicate [6]

## replicate degree n p_hat

## <int> <chr> <int> <dbl>

## 1 1 LT HIGH SCHOOL 322 0.158

## 2 2 LT HIGH SCHOOL 315 0.154

## 3 3 LT HIGH SCHOOL 317 0.155

## 4 4 LT HIGH SCHOOL 310 0.152

## 5 5 LT HIGH SCHOOL 314 0.154

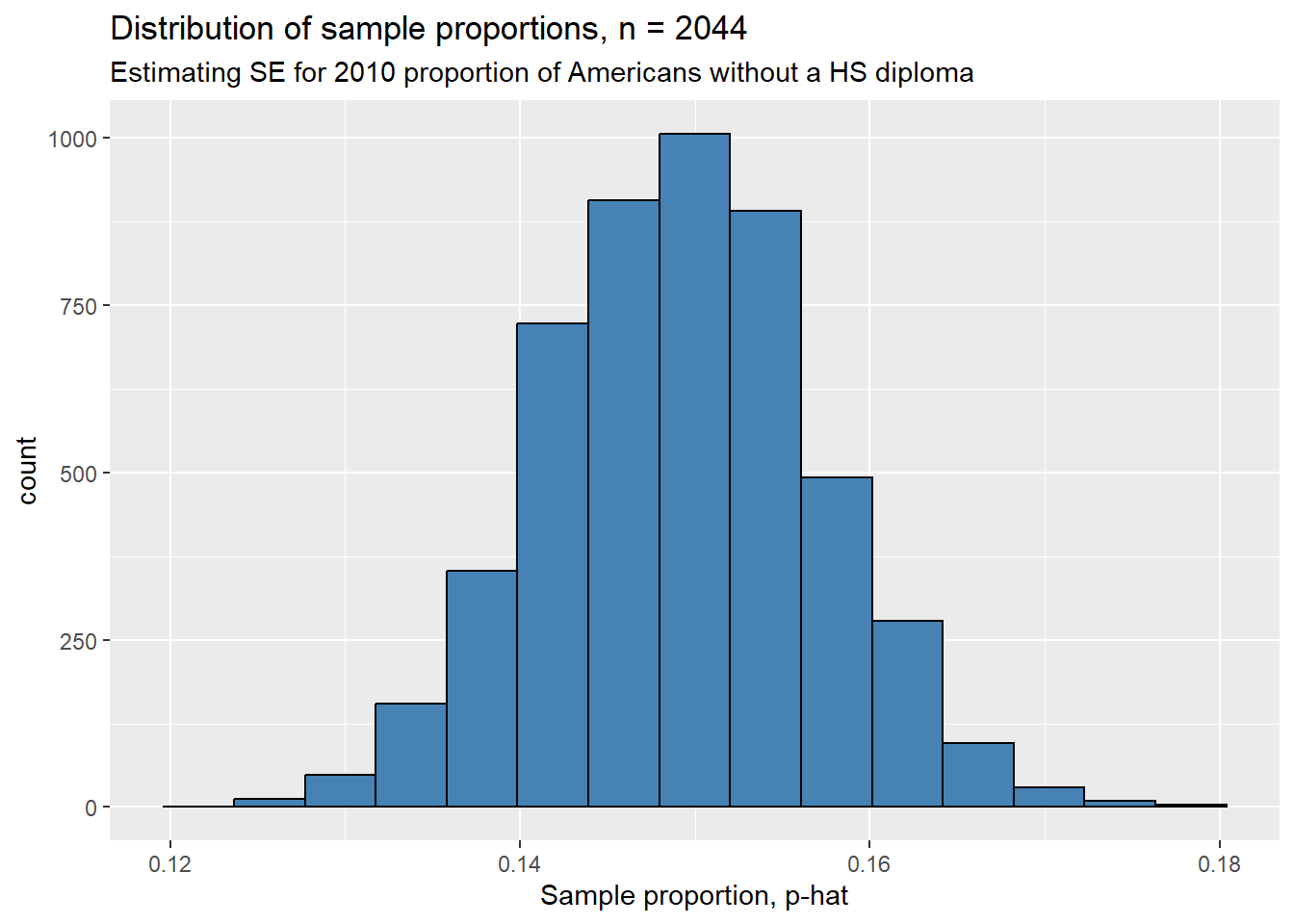

## 6 6 LT HIGH SCHOOL 311 0.152We’ve simulated a sampling distribution! Let’s look at a histogram of this simulated distribution to assess it’s shape and spread. Remember, the Central Limit Theorem says this distribution should likely by symmetric and unimodal.

ggplot(simulated_sampling_distr, aes(x = p_hat)) +

geom_histogram(bins = 15, color = "black", fill = "steelblue") +

labs(title = "Distribution of sample proportions, n = 2044",

subtitle = "Estimating SE for 2010 proportion of Americans without a HS diploma",

x = "Sample proportion, p-hat")

Voila! Our sampling distribution does seem to be symmetric and unimodal. Where precisely is the center?

## [1] 0.1492041What, approximately, is the standard error? Simply the standard deviation of the column p_hat!

## [1] 0.007862322This means if we were to repeatedly sample 2044 Americans and record the proportion of people with less than a college degree, we should expect to see a difference of about 0.8% between the proportions on average. As will see in the next two sections, understanding the standard error is the key to performing inferential statistics. However, before we move on to using sampling distributions, let’s simulate the Central Limit Theorem’s other main claim: the standard error decreases as the sample size increases.

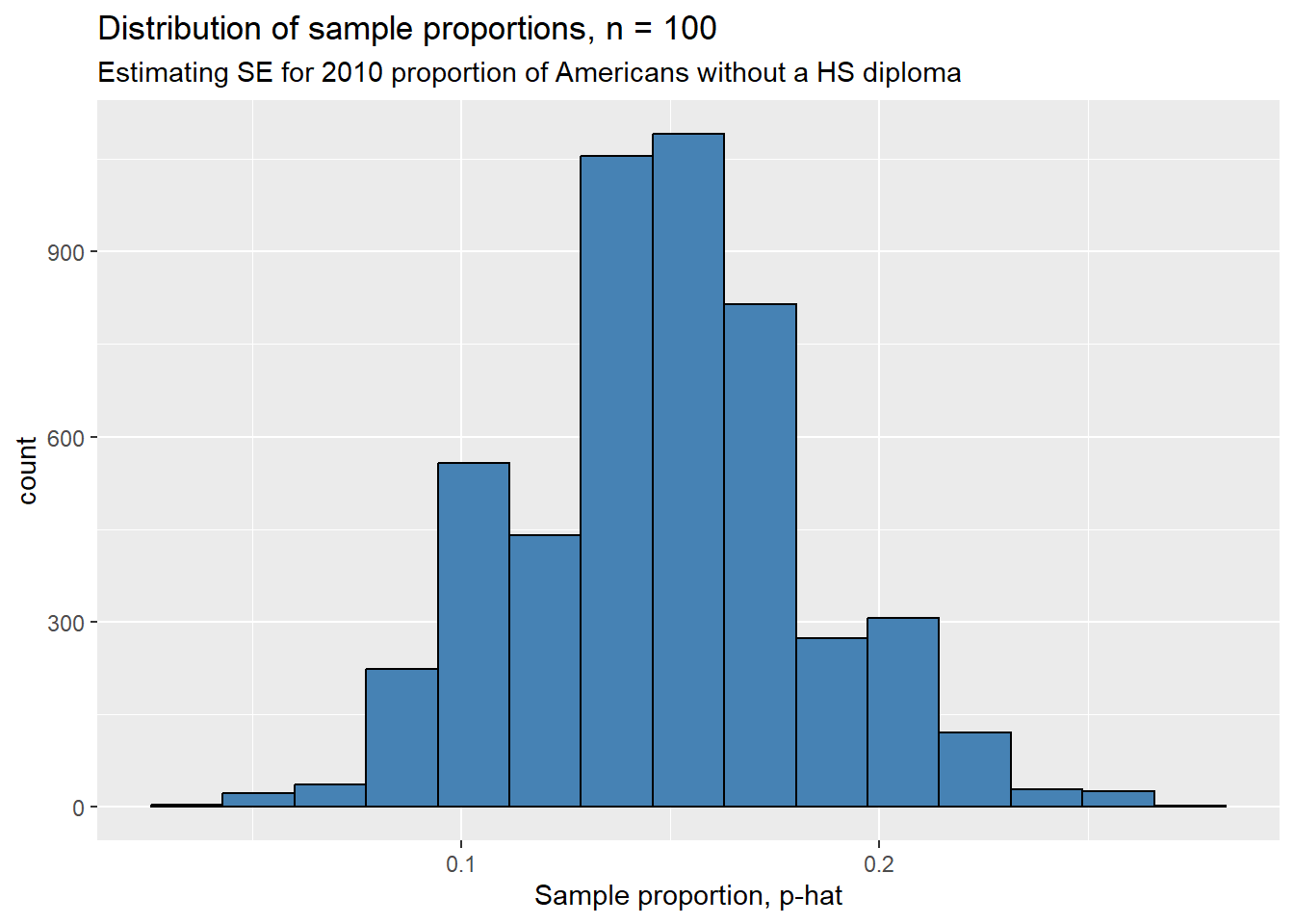

To show this, we’ll go the opposite direction and take smaller samples from the gss2010 data set. We should see the standard error of this simulated distribution increase. The code below simulates a sampling distribution with sample size \(n = 100\).

sampling_dist2 <- gss2010 %>%

rep_sample_n(size = 100, reps = 5000, replace = TRUE) %>%

mutate( degree = if_else(degree == "LT HIGH SCHOOL", "LT HIGH SCHOOL", "other")) %>%

count(degree) %>%

mutate(p_hat = n / sum(n)) %>%

filter( degree == "LT HIGH SCHOOL")

head(sampling_dist2)## # A tibble: 6 × 4

## # Groups: replicate [6]

## replicate degree n p_hat

## <int> <chr> <int> <dbl>

## 1 1 LT HIGH SCHOOL 8 0.08

## 2 2 LT HIGH SCHOOL 20 0.2

## 3 3 LT HIGH SCHOOL 21 0.21

## 4 4 LT HIGH SCHOOL 15 0.15

## 5 5 LT HIGH SCHOOL 15 0.15

## 6 6 LT HIGH SCHOOL 20 0.2Note that just looking at the first 6 sample proportions we can already see more variability than our first distribution. Let’s visualize this one.

ggplot(sampling_dist2, aes(x = p_hat)) +

geom_histogram(bins = 15, color = "black", fill = "steelblue") +

labs(title = "Distribution of sample proportions, n = 100",

subtitle = "Estimating SE for 2010 proportion of Americans without a HS diploma",

x = "Sample proportion, p-hat")

Notice that the center is about the same as our first sampling distribution, but our second is more spread out as we claimed above! To check these claims, let’s look at the average sample proportion and the estimated standard error.

## [1] 0.14884## [1] 0.0353421The average sample proportions are super close, but the standard error of our distribution with \(n = 100\) is about 5 times as large as the standard error of the distribution with \(n = 2044\). This fits our intuition: small samples are less reliable because they have more variability around the population parameter.

Now that we have a feel for sampling distributions, let’s use these simulated sampling distributions to make some inferences.

6.1.2 Confidence intervals

Recall that a confidence interval provides a range of plausible values for a population parameter. For example, we will use our point estimates from the previous section to estimate the true proportion of Americans without a high school diploma. The width of a confidence level depends on two quantities:

the confidence level: a measure of the likelihood that our interval contains to true population parameter. Typical confidence levels are 90%, 95%, and 99%.

the standard error: the standard deviation of the sampling distribution.

Your confidence level determines a critical value \(CV\). If your confidence level is \(CL\), then \(CL\)% of all sample proportions will fall within \(CV\) standard deviations of the true population parameter within the sampling distribution. Thus, as your confidence level increase, the critical value increases, and so your confidence interval ends up getting wider. This makes sense: to be more confident in your estimate, your range of plausible values better be “larger.”

Now we can describe the general formula for confidence intervals:

\[ \text{point estimate} \pm CV \cdot SE\]

In the sections to come, the details of your point estimates along with the procedure to calculate the standard error and critical value will change, but the general formula for a confidence interval is always the same. With this in mind, it becomes much more important to be able to correctly interpret confidence intervals. Before closing out this section, let’s use our simulated sampling distribution from the previous section to estimate the proportion of Americans without a high school diploma in 2010. In particular, we’ll calculate a 95% confidence interval.

Looking back at the histogram of simulated_sampling_distr, we see that it is approximately normally distributed. Recall that roughly 95% of all observations in a normal distribution fall within two standard deviations from the center. Thus, as a rough approximation, our critical value is \(CV = 2\).

We’ve also already found our point estimate, the sample proportion of Americans without a high school diploma pHat, and our standard error, the standard deviation of the sampling distribution.

## [1] 0.1492172## [1] 0.007862322Using the formula, \(\text{point estimate} \pm CV \cdot SE\), our 95% confidence interval is

## [1] 0.1334926 0.1649419In other words, with 95% confidence, we estimate that between 13.3% and 16.5% of all Americans in 2010 did not have a high school diploma.

6.1.3 Hypothesis tests

We use confidence intervals to estimate population parameters, but sometimes we only want to figure out if a population parameter differs from some value. This situation arises when you ask questions like “Will a majority of American Republicans vote for Donald Trump in the 2024 presidential election primary?” or “Do more than 10% of Americans fail to graduate high school?” To answer these types of questions statistically, we perform hypothesis tests. Every hypothesis test has two competing claims:

\(H_0\), the null hypothesis. This typically represents the status quo, that there is no difference, that there is no effect, or that the variables independent.

\(H_a\): the research or alternative hypothesis. This represents, well, your research question. There is a difference, or an effect, or the variables in question are dependent.

We always express the null and alternative hypothesis as statements involving population parameters. For instance, if \(p\) represents the proportion of Americans without a high school diploma in 2010, we would turn the question “Do more than 10% of Americans fail to graduate high school?” into a hypothesis test as

\(H_0 : p = .1\) (or \(p \leq .1\))

\(H_a : p > .1\)

We use sample data to evaluate hypothesis tests and do so by determining how likely or unlikely our sample data would be if the null hypothesis were true. This is called the \(p\)-value of a sample. More precisely, the \(p\)-value of a sample is the probability of observing a sample at least as a favorable for the alternative hypothesis as your own, assuming the null hypothesis is true.

Let’s think about what a \(p\)-value tells is. If you have a relatively small \(p\)-value, say .01, then there would be a 1% chance of observing the data you have (or data that’s even more extreme) if the null were true. We have two competing possibilities here: either the null hypothesis is true and we have witnessed a rare event simply by chance or the alternative hypothesis is true. Unfortunately (and this is especially if you only have a single sample) there’s no way to tell which world you’re living in! As an attempt to remedy this dilemma, we must set a significance level before performing our hypothesis test. The most common significance levels are \(\alpha = .01, .05, \text{ and } .1\). You use the significance level \(\alpha\) to evaluate the hypothesis test. In particular, if

the \(p\)-value is less than \(\alpha\), reject the null and accept the alternative. Or

the \(p\)-value is greater than or equal to \(\alpha\), you fail to reject the null.

Note that a hypothesis test is like a jury trial: a jury either finds the defendant guilty or not guilty, they never deem a defendant innocent. Similarly, we can never prove the null hypothesis; instead, we can only collect enough evidence to believe that it is false. With this in mind, we think of the significance level \(\alpha\) as the probability of a false positive in a hypothesis test. In other words, if the null were true and you could repeatedly perform the same hypothesis test, you would end up rejecting the null \(\alpha\)% of the time.

In general, there are three broad types of hypothesis tests: one-tailed upper, one-tailed lower, and two-tailed tests. The type of test determines how you calculate the \(p\)-value of your sample because it determines what counts as “more favorable for the alternative hypothesis.”

To illustrate this idea, let’s pretend we’re performing a hypothesis test for a proportion with

\[ H_0: p = .5 \]

and suppose we took a sample with size \(n = 200\) and \(\hat{p} = .55\). Below we’ll look at the three types of alternative hypotheses and visualize the corresponding \(p\)-values for our imaginary sample.

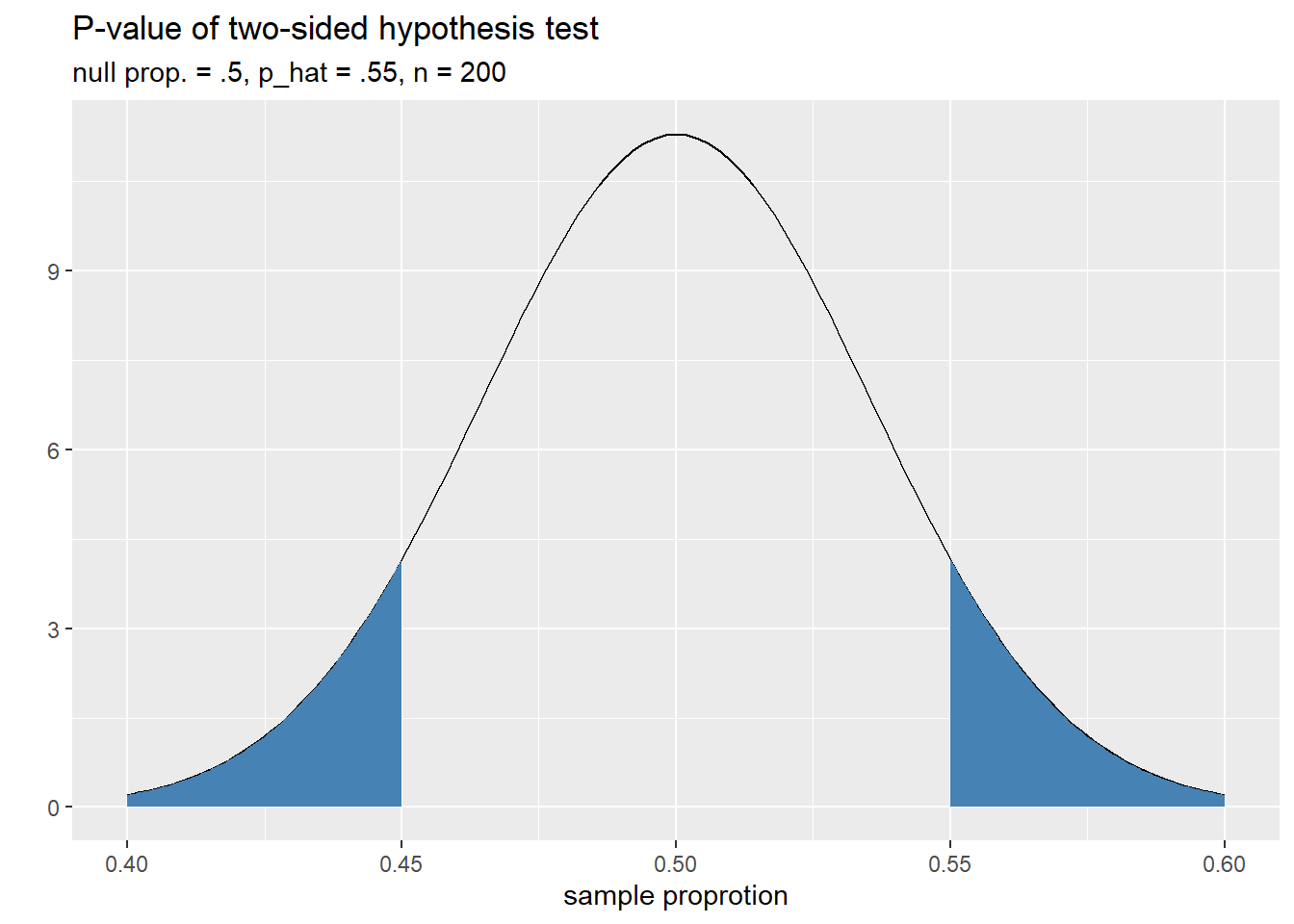

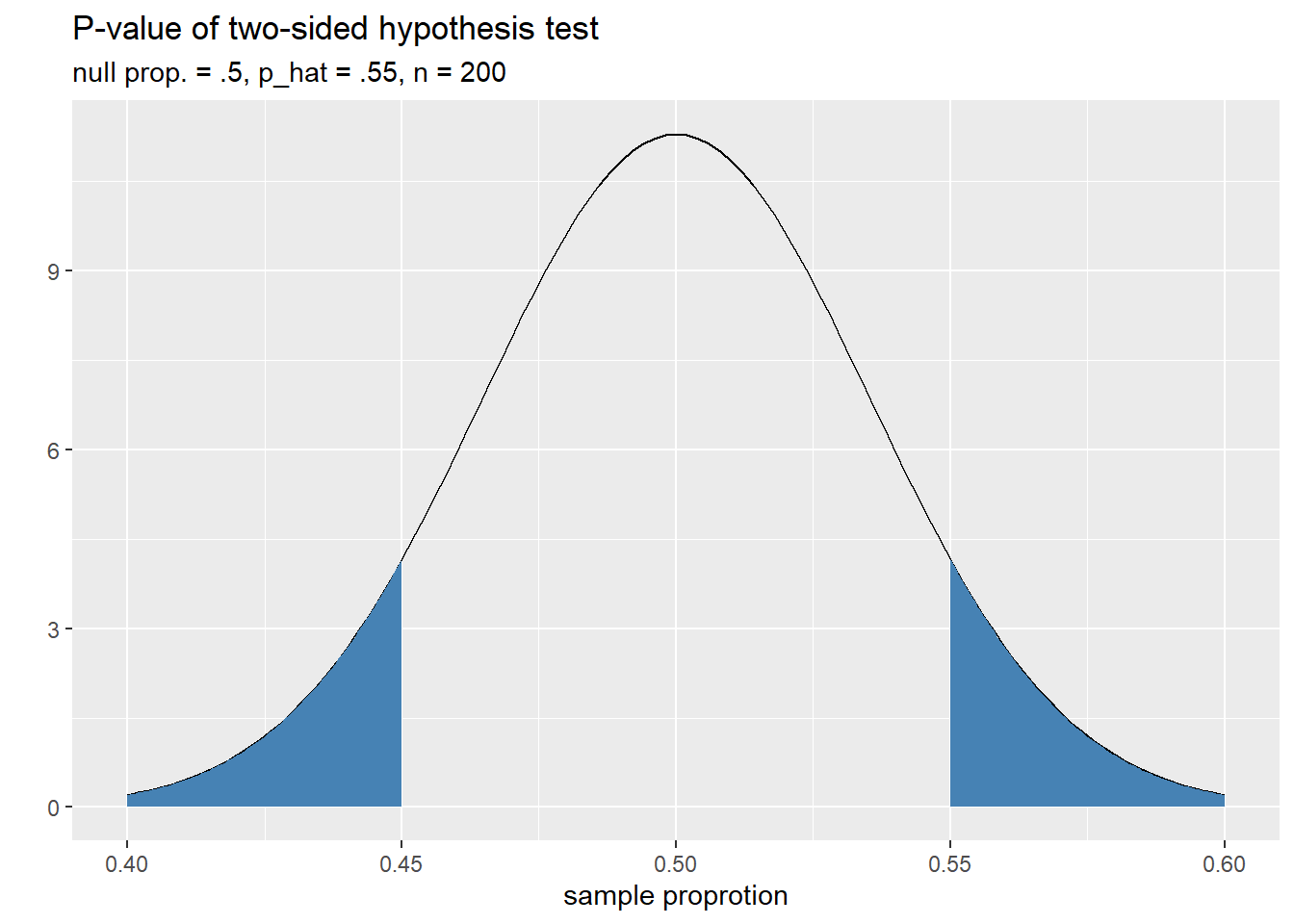

- Two-sided hypothesis test. In this case, we’re simply checking to see if the population parameter deviates from the null value. For our example: \[ H_a: p \neq .5 \]

Samples that are at least as favorable for \(H_a\) as our own come in two flavors: samples with a proportion of at least .55 and those with a proportion of at most .45. Notice that the samples with proportions of at most .45 really are just as favorable as those with proportions of at least .55 when we’re checking for a difference because they are equally as far away from the null proportion of .5. Thus, the \(p\)-value of a two-sided hypothesis tests will be a two-tailed probability. The plot below helps visualize this \(p\)-value as an area in the sampling distribution. We have hidden the plotting code here to prioritize the concepts.

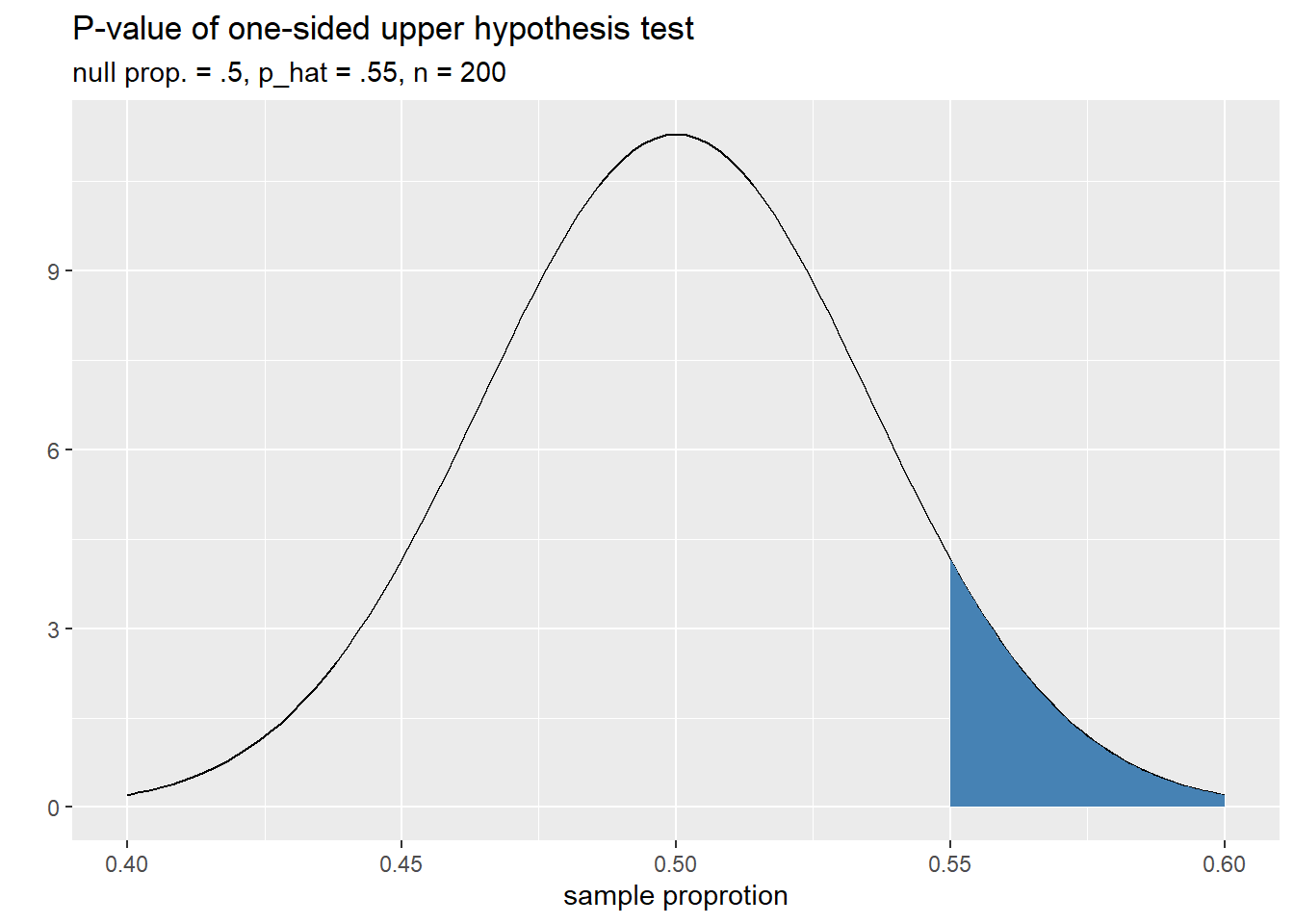

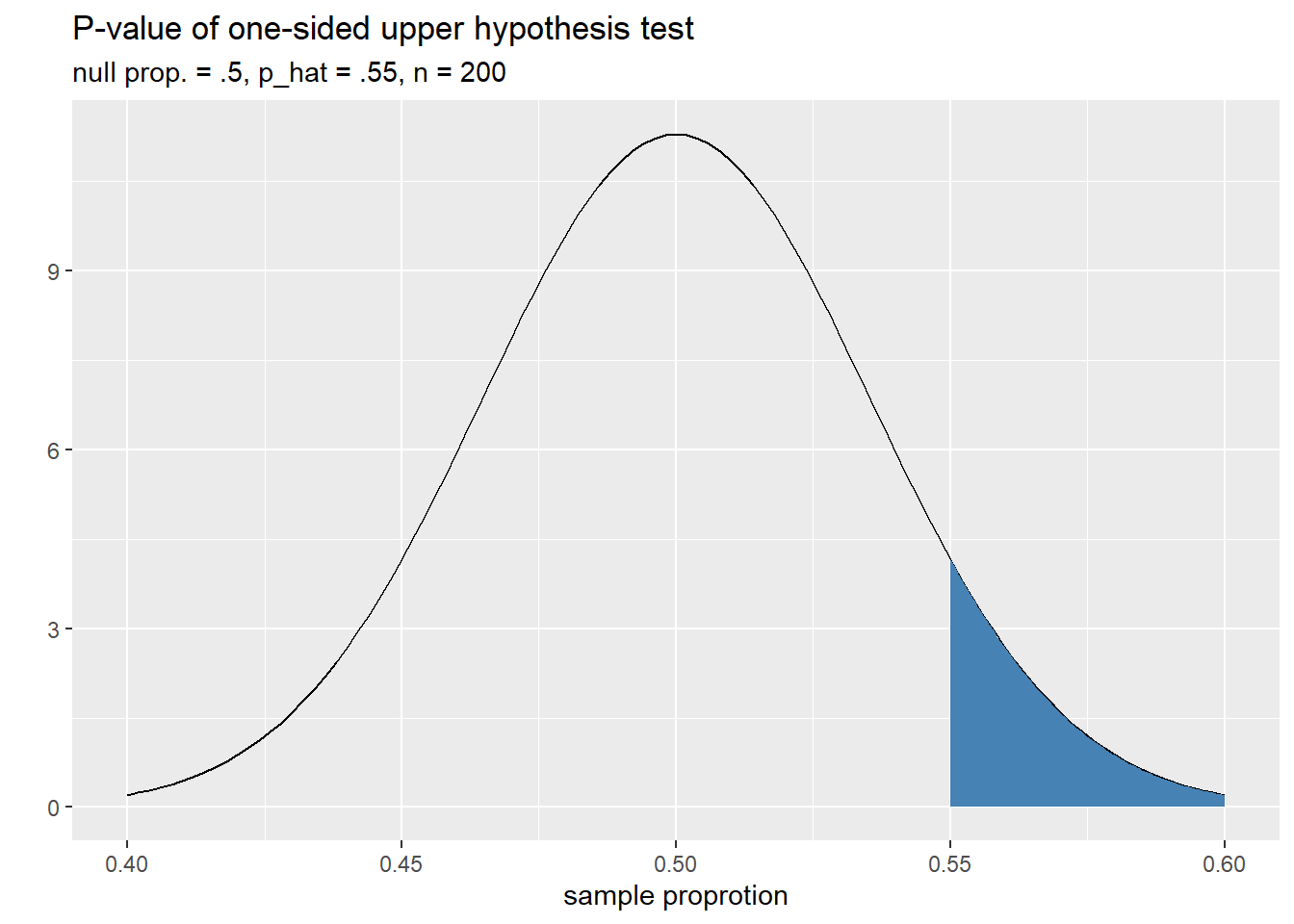

- One-sided upper hypothesis test: In this case, we’re simply checking to see if the population parameter is greater than the null value. For our example:

\[ H_a: p > .5 \]

Samples that are at least as favorable for \(H_a\) as our own only one flavor this time: samples with a proportion of at least .55. Thus the \(p\)-value for this hypothesis test is an upper-tail area. The plot below helps us visualize this.

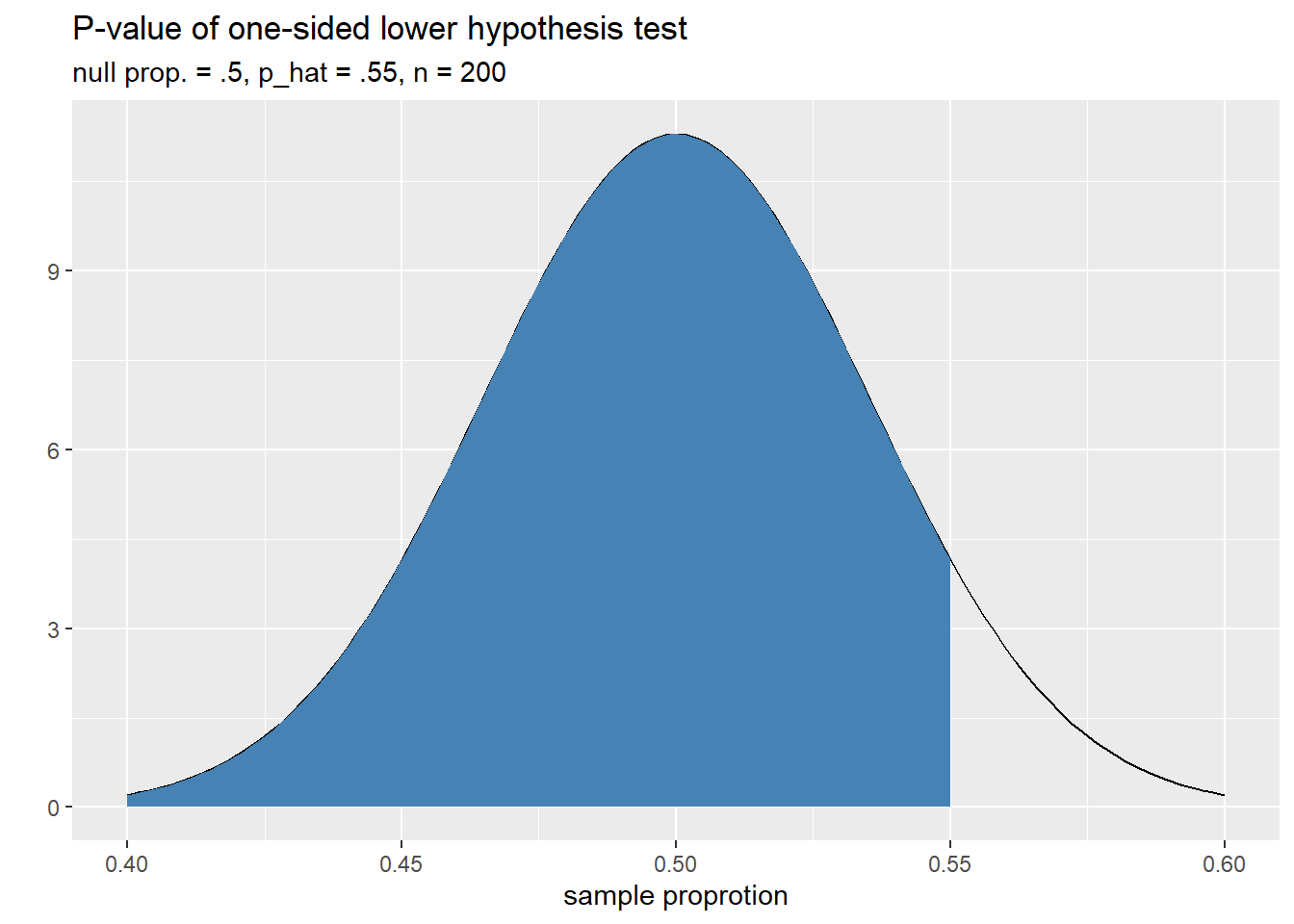

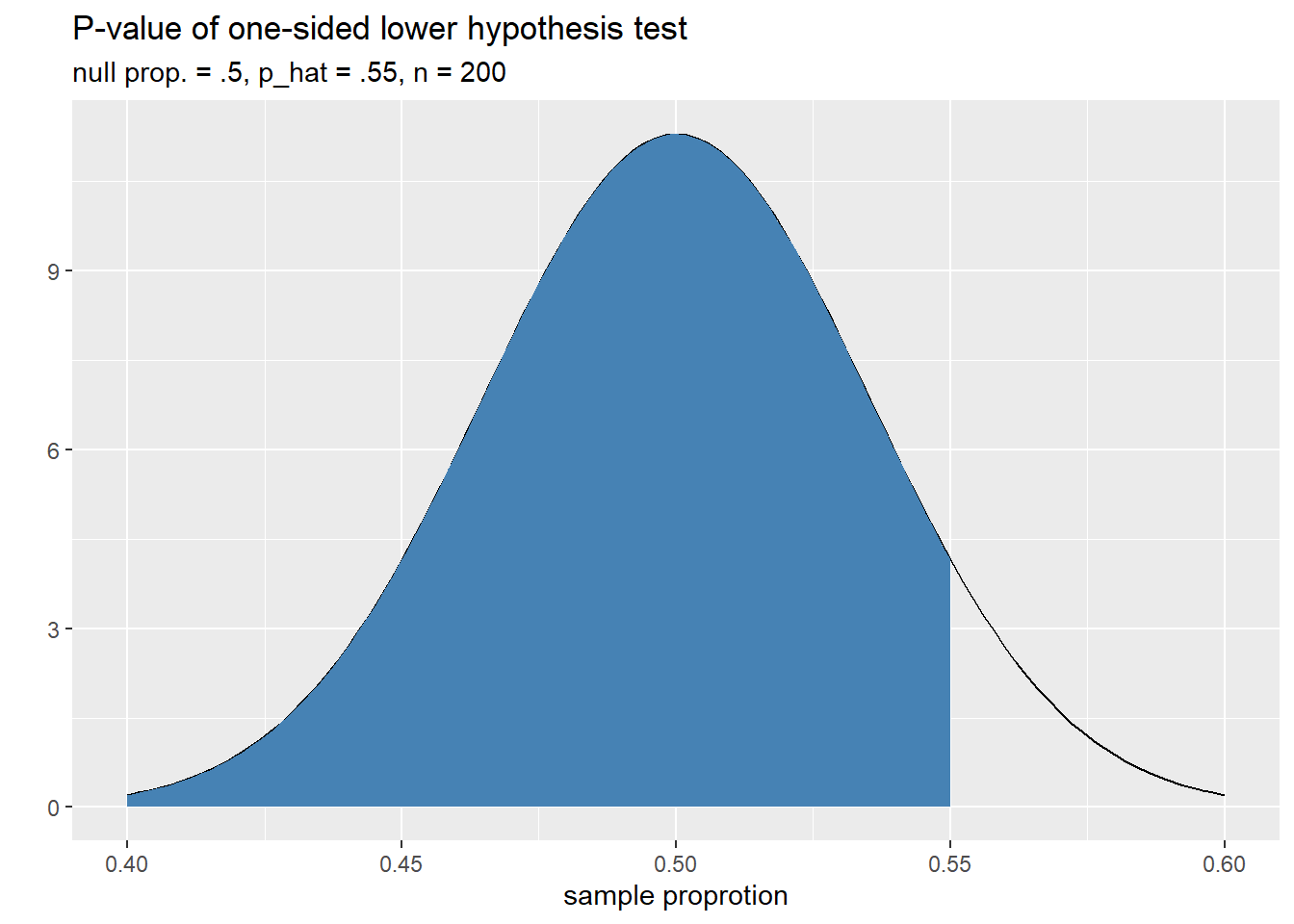

- One-sided lower hypothesis test: In this case, we’re simply checking to see if the population parameter is less than the null value. For our example:

\[ H_a: p > .5 \]

Samples that are at least as favorable for \(H_a\) as our own only one flavor: samples with a proportion of at most .55. Thus the \(p\)-value for this hypothesis test is a lower-tail area in the sampling distribution. The plot below helps us visualize this.

Our \(p\)-value is large because our sample proportion is above the null value when we thought it was going to be below. We should revise our alternative hypothesis in this case.

Let’s finish this section using the high school diploma question we started this chapter with. In particular, in 2010 did more than 10% of Americans fail to graduate high school? As a hypothesis test:

\(H_0: p = .1\)

\(H_a: p > .1\)

Let’s set our significance level to \(\alpha .05\). To evaluate the hypothesis test need to calculate a \(p\)-value. To accomplish this, we need to be able to assess the likelihood of our sample assuming the null hypothesis is true. Here we’re still using the gss2010 data for our sample; recall the recall the sample proportion is \(\hat{p} \approx 0.149\) and the sample size is \(n = 2044\).

In the next section we’ll learn some theory that describes the sampling distribution, but we will simulate a sampling distribution under the null hypothesis.

# generates results from 5000 binomial experiments w/ probability of

# success = .1 and sample size of n = 2044, returns sample proportion.

null_dist <- tibble(p_hat = rbinom(5000, 2044, .1)/2044)

# center of distribution

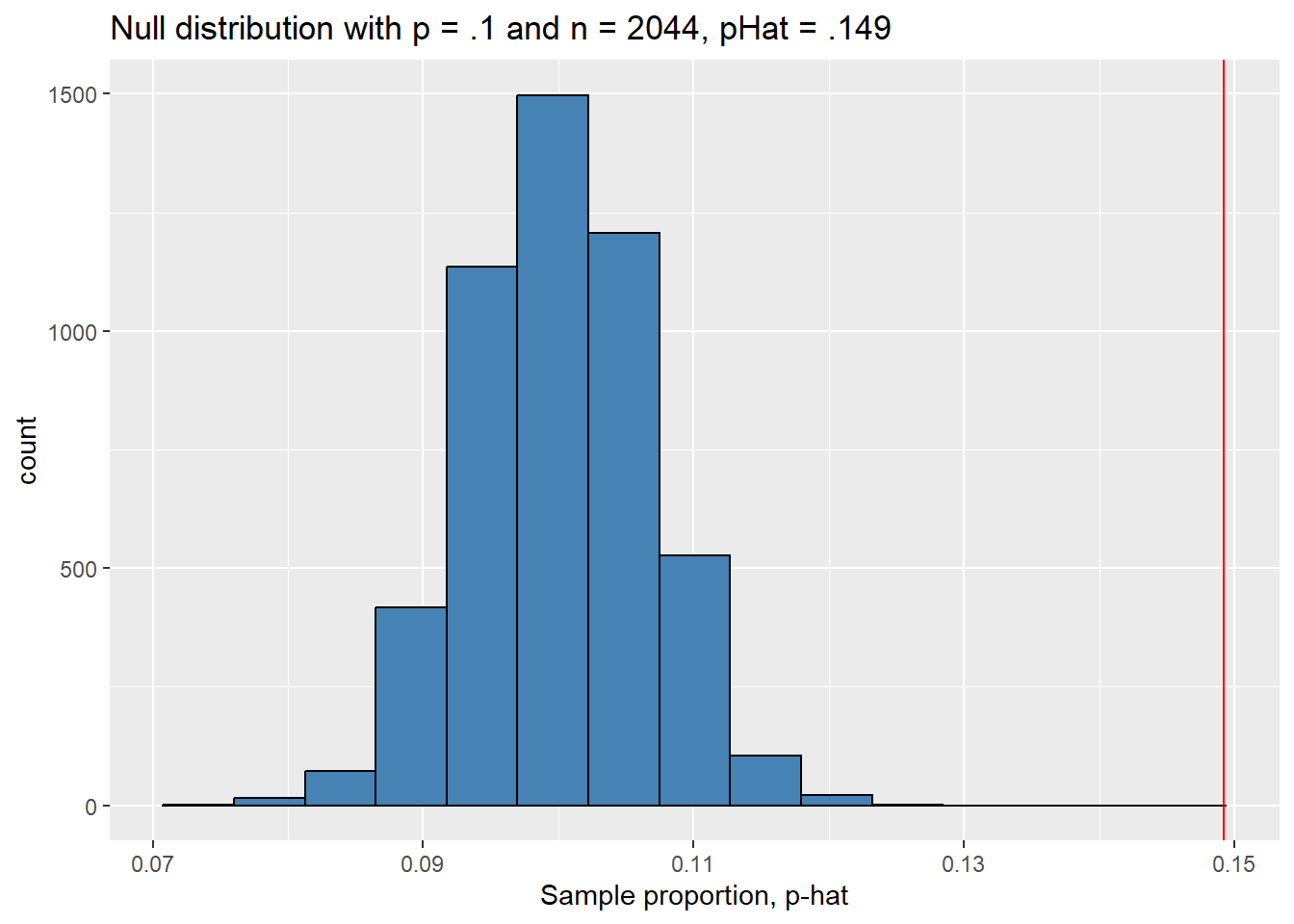

mean(null_dist$p_hat)## [1] 0.1000021## [1] 0.006583714Let’s visualize the null distribution and our sample proportion \(\hat{p}\)’s location in the nul distribution.

ggplot(null_dist, aes(x = p_hat)) +

geom_histogram(bins = 15, color = "black", fill = "steelblue") +

geom_vline(xintercept = pHat, color = "red") +

labs(title = "Null distribution with p = .1 and n = 2044, pHat = .149",

x = "Sample proportion, p-hat")

As we’d expect the null sampling distribution is approximately normal. The plot above indicates that our sample proportion \(\hat{p}\) would be quite unlikely if the null hypothesis were true. To estimate exactly how unlikely, let’s estimate the \(p\)-value of our sample.

# count the number of simulated samples with proportions at least as large as

# our actual sample proportion, divide by number of samples in simulated dist.

pValue <- sum(null_dist$p_hat >= pHat ) / length(null_dist$p_hat)

pValue## [1] 0This means that if 10% of Americans didn’t have high school diplomas in 2010, the probability of finding a sample of 2044 Americans of which 14.9% or more do not have a high school diploma is essentially 0.

Because our \(p\)-value is less than the significance level \(\alpha = .05\), we reject the null hypothesis and accept the alternative. In other words, our data provide compelling evidence to believe that more than 10% of Americans in 2010 did not have a high school diploma.

We finish this section by noting that we spent a lot of time on this example and a lot of time/space on explanation here. Once you get the hang of this, you will perform and interpret hypothesis tests quickly and efficiently. Moreover, most of what we’ll do in this class will not require simulating sampling distributions. For more on this, read on!

6.2 Analyzing categorical variables

Now we will move in to using theoretical sampling distributions to calculate confidence intervals and perform hypothesis tests. The key almost all of the theoretical sampling distributions is the central limit theorem, which states that, if you have a large enough sample of independent observations, the sampling distribution will be approximately normal. Moreover, the standard error decreases as your sample size increase. In the following sections we will see a few different incarnations of the central limit theorem; the big picture will stay the same while the fine details change as we analyze different types of data.

This section focus on analyzing categorical variables. When making inferences about categorical variables it is best to analyze proportions because these generalize to populations much better than counts. In particular we want to look at the proportion of responses in a sample that take on a specific value of the categorical variable. We’ve seen this already, of course (see the last section for an example). The following subsections describe how to make inferences using a single sample proportion, two sample proportions, or (in some sense) many sample proportions.

6.2.1 Single sample proportion

When analyzing a single categorical variable, the most basic parameter to make an inference about is the population proportion \(p\), the proportion of the population taking on a particular value of the categorical variable. For example, the proportion of American adults in 2010 that do not have a high school diploma. We use sample proportions \(\hat{p}\) to make inferences about \(p\) and under the right circumstances can apply the Central Limit Theorem to understand the distribution of sample proportions.

Sample statistic: sample proportion \(\hat{p}\)

Population parameter: population proportion \(p\)

The Central Limit Theorem for a Single-sample Proportion states that if you

have a sample of \(n\) independent observations (hard to guarantee, but safe to assume if n < 10% of the populatino and you employ random sampling) and

and at least 10 (expected) success and failures (ie \(\hat{p}n \geq 10\) and \((1- \hat{p})n \geq 10\) )

Then the sampling distribution of \(\hat{p}\) is approximately normal with a mean of \(p\) and standard error \(SE = \sqrt{ \frac{p(1-p)}{n}}\). In symbols: \[ \hat{p} \sim N \left( \text{mean} = p, SE =\sqrt{ \frac{p(1-p)}{n}} \right) \]

Moreover, if \(p\) is unknown (as it usually is!), \[ SE \approx \sqrt{ \frac{\hat{p}(1-\hat{p})}{n}}\] In other words, you can approximate the standard error using the sample proportion.

In this situation, the hypotheses you need to meet in order to apply the Central Limit Theorem are typically easy to satisfy, which means we can use the CLT to make inferences in all of our favorite ways!

6.2.1.1 Confidence intervals

Suppose you have a sample of \(n\) observations that meets the conditions for applying the Central Limit Theorem. Then the distribution of sample proportions is approximately normal, centered at the true population proportion with a standard error of

\[ SE \approx \sqrt{ \frac{\hat{p}(1-\hat{p})}{n}}.\]

We can use these facts to calculate a confidence interval to estimate the population proportion. Recall that every confidence interval has the form

\[ \text{point estimate} \pm CV \cdot SE\]

where \(CV\) is the critical value determined by the confidence level. The CLT provides the standard error and our sample gives the point estimate, so we only need to worry about the critical value. Recall that if your confidence level is \(CL\), then \(CL\)% of all sample proportions will fall within \(CV\) standard deviations of the true population parameter within the sampling distribution. The sampling distribution is normal by the Central Limit theorem, so we can use the qnorm(...) function to find precise critical values.

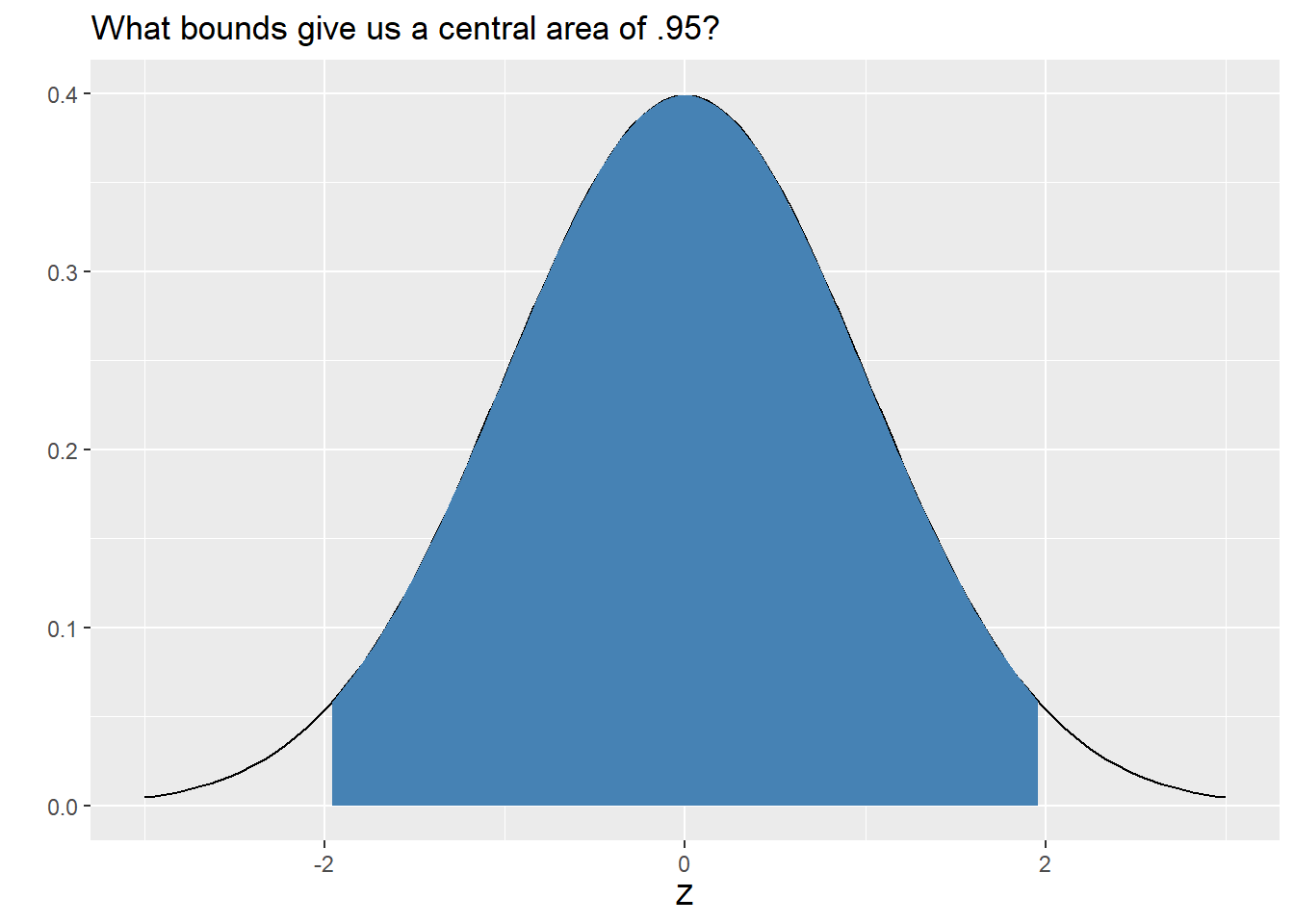

As an example, let’s find the critical value for a 95% confidence interval. We want to find the value \(z^\ast\) so that 95% of all sample proportions fall within \(z^\ast\) standard deviations of the population proportion. In other words, we need to find \(z^\ast\) so that \(P ( |Z| \leq z^\ast ) = .95\). The graph below helps visualize the setup.

We can see that the bounds are almost \(\pm2\), but not quite. If the central area is .95, then the tail area is .05. The upper and lower tails are symmetric, so the upper tail area is .025. Thus, to find our critical value, we simply evaluate

## [1] 1.959964Let’s use the same idea to find the critical values for some common confidence levels.

cvTable <- tibble( conf.level = c(.9, .95, .98, .99),

crit.val = qnorm( (1 - conf.level)/2, lower.tail =F))

cvTable## # A tibble: 4 × 2

## conf.level crit.val

## <dbl> <dbl>

## 1 0.9 1.64

## 2 0.95 1.96

## 3 0.98 2.33

## 4 0.99 2.58With that taken care of, we can calculate confidence intervals for any proportion we want at any confidence level! In particular, an \(\alpha\)% confidence level for a single proportion is

\[\hat{p} \pm z^\ast_\alpha \cdot \sqrt{\frac{\hat{p}(1-\hat{p})}{n}}. \]

Note there that this really is the same formula as above just with the details of the CLT filled in.

Let’s close this off with an example, recreating what we did in the last section to estimate the proportion of American adults without high school degrees. As a reminder, this is from the gss2010 data set from the openintro package.

The code chunk below goes through the calculation of a 95% confidence interval for the proportion in question.

# components of CI

pHat <- sum( gss2010$degree == "LT HIGH SCHOOL") / length(gss2010$degree)

SE <- sqrt(pHat*(1-pHat)/length(gss2010$degree))

zCrit <- qnorm( (1 - .95)/2, lower.tail = FALSE)

# CI

pHat + c(-1,1)*zCrit*SE## [1] 0.1337709 0.1646636So with 95% confidence, between 13.4% and 16.5% of all Americans in 2010 did not have a high school diploma. Compare this to the simulated example from the previous section: the confidence intervals are almost identical! You can think of this as the central limit theorem verifying simulations or simulations verifying the central limit theorem. In their case, it’s cool!

Next up, we’ll learn how to perform hypothesis tests using theoretical sampling distributions.

6.2.1.2 Hypothesis testing

Suppose you have a sample of \(n\) observations and you’d like to perform a hypothesis test using this sample. Recall that you assume the null hypothesis is true when performing a hypothesis test. Thus, in order to apply the Central Limit Theorem, we need to make sure our sample is sufficiently large under the null hypothesis.

More explicitly, suppose the null proportion is \(p_0\). If

your sample has \(n\) independent observations and

your sample has at least 10 expected success and failures, ie \(n p_0 \geq 10\) and \(n (1- p_0) \geq 10\)

then the distribution of sample proportions is approximately normal:

\[ \hat{p} \sim N\left( \text{mean} = p_0 , SE = \sqrt{\frac{p_0(1-p_0)}{n}} \right). \]

We can use use this sampling distribution to calculate \(p\)-values thus execute hypothesis tests. We will show how to do this first with the toy examples from the last section which demonstrate the three types of hypothesis tests then one last time using the familiar gss2010 data.

For the next three examples, suppose we have a sample of \(n = 200\) independent observations and a sample proportion of \(\hat{p} = .55\). We will perform three hypothesis tests to see if our sample provides sufficient evidence to conclude that the true population proportion is greater than .5, less than .5, or different from .5 using a siginificance level of \(\alpha = .05\).

In all cases, note that \(.5*200 = 100 \geq 10\), so the Central Limit Theorem applies. Furthermore

\[ \hat{p} \sim N\left(\text{mean} = .5, SE = \sqrt{\frac{.5\cdot .5}{200}} \right) \]

Two-sided hypothesis test: Our hypothesis test is

\[\begin{array}{cc} H_0: p = .5 \\ H_a: p \neq .5 \end{array} \]

and the image below helps us visualize the \(p\)-value of our sample with \(\hat{p} = .55\)

The upper and lower tails of the \(p\)-value are symmetric about the mean, so we can calculate one of them and double it.

pNull <- .5 SE <- sqrt(pNull*(1 - pNull)/200) pHat <- .55 pVal <- 2*pnorm(.55, mean = pNull, sd = SE, lower.tail = F) pVal## [1] 0.1572992With a \(p\)-value of approximately .16 we fail to reject the null hypothesis. The data do not provide compelling evidence of a difference.

One-Sided Upper Hypothesis test: Our hypothesis test is

\[\begin{array}{cc} H_0: p = .5 \\ H_a: p > .5 \end{array} \]

and the image below helps us visualize the \(p\)-value of our sample with \(\hat{p} = .55\)

The \(p\)-value is the shaded upper tail area and can be calculated as follows.

pNull <- .5 SE <- sqrt(pNull*(1 - pNull)/200) pHat <- .55 pVal <- pnorm(.55, mean = pNull, sd = SE, lower.tail = F) pVal## [1] 0.0786496With a \(p\)-value of approximately .08 we fail to reject the null hypothesis at a significance level of \(\alpha =.05\) . The data do not provide compelling evidence that the true proportion is greater than .5.

One-Sided Lower Hypothesis test: Our hypothesis test is

\[\begin{array}{cc} H_0: p = .5 \\ H_a: p < .5 \end{array} \]

and the image below helps us visualize the \(p\)-value of our sample with \(\hat{p} = .55\)

The \(p\)-value is the shaded llower tail area and can be calculated as follows.

pNull <- .5 SE <- sqrt(pNull*(1 - pNull)/200) pHat <- .55 pVal <- pnorm(.55, mean = pNull, sd = SE) pVal## [1] 0.9213504No suprise here. With a \(p\)-value of approximately .92 we fail to reject the null hypothesis at a significance level of \(\alpha =.05\) . The data do not provide compelling evidence that the true proportion is less than .5.

We close this section using the gss2010 data to perform a hypothesis test to see if more than 10% of Americans in 2010 did not have a high school diploma. Again we’ll use a significance level of \(\alpha = .05\). Recall the following sample size and statistic from earlier.

## [1] 2044## [1] 0.1492172We reformulate our question as a hypothesis test.

\[\begin{array}{cc} H_0: p = .1 \\ H_a: p > .1 \end{array} \]

We assume the observations in the gss2010 data set are independent. Before preceding with the hypothesis test, we need to make sure our sample size is sufficiently large so that we can use the Central Limit Theorem.

## [1] TRUE## [1] TRUESince the calculation above returned two TRUE’s we’re good to go! The CLT implies.

\[ \hat{p} \sim N \left(\text{mean} = .1, SE = \sqrt{\frac{.1\cdot.9}{2044}} \right)\]

We are performing a one-sided upper hypothesis test, so our \(p\)-value is

## [1] 5.983973e-14Since \(0 < .05 = \alpha\), we reject the null and accept the research. Our data provide sufficient evidence to believe that more than 10% of Americans in 2010 did not have a high school diploma.

This \(p\)-value is the same as the one we calculated in the previous section! This is no surprise: you can either think of this example as a check on simulation techniques or as the simulation techniques as a check on the central limit theorem.

6.2.2 Two sample proportion

We often want to compare the same measurement or parameter for two different populations. As usual, we almost never know any single population parameter, so the best we can do is take a sample from each population and compare the sample statistics. When analyzing a categorical variable, we end up comparing sample proportions to make inferences about population proportions.

To make this more precise, suppose you’re investigating some proportion for two populations.

Our population parameter of interest is \(p_1 - p2\)

Our sample statistic is \(\hat{p}_1- \hat{p}_2\)

To make an inference about the population parameter, we need to learn about the sampling distribution of \(\hat{p}_1- \hat{p}_2\). Fortunately, if we can apply the central limit theorem for each sample individually, a version of the central limit theorem applies again! The formula for the standard error looks more complicated, but note that the variance of the sampling distrubtion for \(\hat{p}_1- \hat{p}_2\) is just the sum of the variances for each each of the individual sampling distributions.

CLT for two sample proportions: Suppose you are analyzing the difference between two population proportions \(p_1\) and \(p_2\), take two samples of size \(n_1\) and \(n_2\) from each population respectively. If

the observations within each sample are independent from one another,

the observations between the samples are independent, and

each sample has at least ten expected or observed success and failures (ie \(n_ip_i \geq 10\) and \(n_i(1 - p_i) \geq 10\) for \(i= 1,2\))

Then the sampling distribution for \(\hat{p}_1- \hat{p}_2\) is approximately normally distributed. In particular,

\[ \hat{p}_1- \hat{p}_2 \sim N\left( \text{mean} = p_1 - p_2 \text{ and } SE = \sqrt{\frac{p_1(1-p_1)}{n_1}+ \frac{p_2(1-p_2)}{n_2}} \right) \]

The details on how we employ the CLT for two sample proportions changes depending on whether we’d like to calculate a confidence interval or perform a hypothesis test. As above, this difference arise because always assume the null hypothesis is true when performing a hypothesis test. The following subsections highlight exactly what these differences entail, but, briefly, it only changes the standard error calculation.

6.2.2.1 Confidence intervals

As a reminder, the general formula for a confidence interval is

\[\text{point est.} \pm (\text{crit. val.})\cdot SE \]

so to calculate and interpret a confidence interval for two sample proportions, we simply need to figure out each of these three pieces. We’ll do so by example using the cancer_in_dogs data set from the openintro R package.

This data set is the result of a 1994 study from 1994 designed to determine if exposure to the herbicide herbicide 2,4-Dichlorophenoxyacetic acid (2,4-D) increases dogs’ risk of developing cancer. Let’s calculate a 95% confidence interval estimating the difference in cancer rates between dogs exposed to the herbicide and those who are not.

There are 491 dogs in the data set who were exposed and 945 dogs that were not. The data set has two columns: order and response; the first indicates whether or not the dogs were exposed to the herbicide and the second indicates whether or not the dog developed cancer. Below we display 4 randomly selected rows from the dataset.

## # A tibble: 4 × 2

## order response

## <fct> <fct>

## 1 no 2,4-D no cancer

## 2 2,4-D cancer

## 3 2,4-D cancer

## 4 2,4-D cancerLet \(p_1\) denote the true proportion of dogs who are exposed to the herbicide and develop cancer; let \(p_2\) denote the proportion of dogs who are not exposed but still develop cancer. We need to determine our sample proportions \(\hat{p}_1\) and \(\hat{p}_2\). There are many ways to do this in R. A quick way is to use the table(...) function. Since the table only has two columns we can run the following to create a simple two-way table and store it in memory for future use.

| 2,4-D | no 2,4-D | |

|---|---|---|

| cancer | 191 | 300 |

| no cancer | 304 | 641 |

The columns in this two way table correspond to the samples of interest (ie the explanatory variable) and the rows the response variable. Thus, the denominator of our sample proportions will be the column sums and the numerators will be the entries in the cancer row.

n1 <- sum(sumTab[,1])

n2 <- sum(sumTab[,2])

pHat1 <- sumTab[1,1] / n1

pHat2 <- sumTab[1,2] / n2

c(pHat1, pHat2)## [1] 0.3858586 0.3188098When we calculate a confidence interval for two sample proportions, we’re estimating the difference between the two proportions, so our point estimate is

## [1] 0.06704881This means that roughly 6.7% more dogs exposed to the herbicide developed cancer in the sample data. Before we calculate a confidence interval, we need to make sure our samples meet the conditions needed to apply to Central Limit theorem.

Independence within samples and between samples. The study employed random sampling for both groups and each sample size is less than 10% of its corresponding population. Moreover, the samples were taken independently of one another. We’re thus good to go here.

Sample size requirements. From the two-way table

sumTababove we see that we have more than 10 successes (cancer) and failures (no cancer) in each group of dogs. Good to go here too!

The CLT applies, so the sampling distribution of \(\hat{p}_1 - \hat{p}_2\) is approximately normally distributed. Moreover, the standard error is

\[ SE \approx \sqrt{\frac{\hat{p}_1(1-\hat{p}_1)}{n_1}+ \frac{\hat{p}_2(1-\hat{p}_2)}{n_2}} \]

which we can now easily calculate!

## [1] 0.02663677This means that if we repeated the study over and over we should expect about changes of about 2.7% between the various differences in sample proportions. We’re finally ready to calculate our confidence interval! Since the sampling distribution is approximately normal, our the critical value for our 95% confidence interval is

and our confidence interval is

## [1] 0.01484171 0.11925591How should we interpret this confidence interval? Remember, we’re estimating the difference in cancer rates between dogs who were exposed to the herbicide and those who were not. Thus:

With 95% confidence, the cancer rate of dogs who are exposed to 2,4-Dichlorophenoxyacetic acid is between 1.5% and 11.9% higher than dogs who were not exposed to the herbicide.

The next section will go through hypothesis testing using the same data.

6.2.2.2 Hypothesis testing

Out in the wild you may want to simply check for a difference between two population proportions. The statistical inference for this is, of course, a hypothesis test. When you’re investigating a question like this your null and research hypotheses are

\[ \begin{array}{cl} H_0: & p_1 - p_2 = 0 \\ H_a: & p_1 - p_2 \neq 0. \end{array} \]

The alternative hypothesis can by one-sided if you’d like; if it were \(p_1 - p_2 > 0\) you’d be asking if the first proportion is greater than the second and the opposite if it were \(p_1 - p_2 < 0\). You’d could use a one sided hypothesis test to answer a question like “are there proportionally more Republicans in Montana than in Wyoming?”

As usual the CLT is the main tool for performing a 2-sample proportion hypothesis test. In this case we need to make sure that

our observations are independent within and between groups

each sample has at least 10 expected success and failures.

There’s a small difficulty we need to deal with here. Recall that we always assume the null hypothesis is true when performing a hypothesis test. In this case that only means \(p_1 = p_2\), but it doesn’t make any claim about what these proportions are actually equal to. The remedy is to estimate this value using a pooled proportion. Suppose your first and second samples have \(n_1\) and \(n_2\) observations respectively and \(suc_1\) and \(suc_2\) successes. The pooled sample proportion is

\[ \begin{array}{ccc} \hat{p}_{pool} & = & \frac{suc_1 + suc_2}{n_1 + n_2} \\ & = & \frac{ \hat{p}_1 n_1 + \hat{p}_2 n_2}{n_1 + n_2} \end{array} \] or, in words, the pooled proportion is the total number of successes in both samples divided by the total number of observations. We use \(\hat{p}_{pool}\) to assess the sample size requirements and in the formula for the standard error if our samples meet the conditions to apply the CLT. Let’s see how this plays out using the herbicide and cancer in dogs example from the last section. A two-way table summarizing the results of the experiment is below.

| 2,4-D | no 2,4-D | |

|---|---|---|

| cancer | 191 | 300 |

| no cancer | 304 | 641 |

The pooled proportion is the sum of the first row of this table divided by the sum of all entries in this table.

## [1] 0.341922In other words, if we ignored herbicide exposure about 34% of the dogs in our sample developed cancer. We use this proportion to ensure that our samples are both large enough to apply the central limit theorem.

## [1] 169.2514 325.7486## [1] 321.7486 619.2514Thus both of our samples have at least 10 expected success and expected failures, so we can apply the CLT! Remember we’re assuming that the null hypothesis is true and in turn that \[p_1 = p_2 \approx \hat{p}_{pool}\]. Thus

\[ \begin{array}{ccc} SE & = & \sqrt{\frac{p_1(1-p_1)}{n_1}+ \frac{p_2(1-p_2)}{n_2}} \\ & \approx & \sqrt{\frac{\hat{p}_{pool}(1-\hat{p}_{pool})}{n_1} + \frac{\hat{p}_{pool}(1-\hat{p}_{pool})}{n_2}} \end{array} \]

The calculation for our current working example is below.

## [1] 0.02633795We’re finally ready to calculate the \(p\)-value for this experiment. Let’s perform this hypothesis test with a significance level of \(\alpha = .05\).

Our point estimate of \(p_1 - p2\) is pDiff below.

## [1] 0.06704881The test statistic, which in this case is a \(Z\)-score, of our data is

\[ \begin{array}{ccc} Z & = & \frac{\text{point est.} - \text{null value}}{SE} \\ & = & \frac{ \hat{p}_1 - \hat{p}_2}{SE} \end{array} \]

or as follows in R.

## [1] 2.545711Since \(Z >0\) the \(p\)-value of our hypothesis test is

## [1] 0.01090555In other words, if there were no difference between cancer rates in dogs exposed to the herbicide and those who were not there would be a 1.1% chance of observing data like our own or more extreme.

Since \(p < \alpha = .05\), we reject the null and accept the research: The data provide compelling evidence to believe that there the cancer rate of dogs exposed to the herbicide is different from the rate of dogs who were not exposed.

Note that this may have been a good candidate for a one-sided hypothesis test since we’re not simply interested in detecting a difference. We may be more interested in seeing if dogs exposed to the herbicide developed cancer at greater rates than those who were not exposed. In this situation, it is common to perform a two-sided hypothesis test, but also report a confidence interval to indicate which side of the null value the true difference falls on if your tests results in a rejection of the null.

6.2.3 Chi-squared tests

We can get a lot of mileage making inferences about categorical variables using single and two sample proportion tests and confidence intervals, but they don’t do everything we need. A single proportion may not always capture the subtleties we’re interested in. For example, if you want to the test whether or not the outcomes of a die toss really are equally likely, it isn’t enough to check if a 1 occurs 1/6 of the time using a single sample proportion test because say 4’s could be less likely and 6’s more likely. Similarly, when we’re testing for an association between two categorical variables a 2-sample proportion test may miss some differences or subtleties we’re interested in. For instance, if you’re testing for an association between smoking and socio-economic status, it is possible that lower and upper class individuals smoke at the same rate, but that middle class people smoke at a different rate. We thus need to develop a tool that can capture these differences that our previous techniques miss. This is where chi-squared (or \(\chi^2\)) tests come in.

These tests are different from our the previous ones because you analyze counts instead of proportions. Counts are summarized in either one-way or two-way tables; one-way tables display the counts of the values a single categorical variable in a sample while two-way tables display the counts of the values of two categorical variables.

The data set sp500_seq from the openintro package is a summary of the all S&P500 trading day outcomes from 1950 to 2018. To get to the data set in question, first label each day as a down day or an up day, then count the number of days between two up days. The outcomes in this data set are 1, 2, 3, …, 7+ and we treat these values as an ordered categorical variables because of the 7+. Below we see a one-way table for the counts of lag times between up days. We will analyze this data set in the next section, but we will use it to test to see if the direction of the S&P500 on one day is independent from its direction on the previous day.

##

## 1 2 3 4 5 6 7+

## 1532 760 338 194 74 33 17For an example of a two-way table, we’ll use the smoking data set from the openintro package. This data set presents some survey data on smoking habits in the UK. You might wonder if there’s an association between marital status and smoking in the UK. This data set could provide some insight on this question and you could attempt to answer it by analyzing the following two-way table.

##

## Divorced Married Separated Single Widowed

## No 103 669 46 269 183

## Yes 58 143 22 158 40The basic idea behind all chi-squared tests is that under the null hypothesis each cell of your tabular data has an expected count; the goal is then is to measure the deviation between the observed counts and the expected counts. Moreover, we want a single test statistic that summarizes this.

You might start by simply looking at the difference between the observed \(O\) and expected \(E\) counts, then adding these differences up. Here we run into a dilemma that we’ve encountered before: positive and negative differences will possibly cancel each other out and make it look like there are no differences when in fact the differences are extreme. Instead, we square the difference between the observed and expected counts: \((O - E)^2\).

We still have a small dilemma. Suppose you have two cells with observed counts 11 and 101. The expected counts for these cells are 10 and 100 respectively; \((O- E)^2 = 1\) for both of these cells, but the first observed count is 10% different from the expected while the second is only 1% different from the expected. We thus need to measure the squared deviation from the expected counts relative to the expected counts in each cell. For each cell in our summary table, we calculate

\[ \frac{(O-E)^2}{E}\]

This value ends up being a squared \(Z\)-score! The single test statistic we calculate from our sample is the sum of these squared \(Z\)-scores and is called a \(\chi^2\)-test statistic (or chi-squared test statistic).

\[ \chi^2 = \sum_{all \, cells \\ in \, table } \frac{(O-E)^2}{E}\]

Since the test statistics are sums of squared \(Z\)-scores, the corresponding sampling distribution is a sum of squared standard normal distributions. The number of squared normal distributions is a parameter called degrees of freedom, \(df\). Thus you completely determine a chi-squared distribution with \(df\).

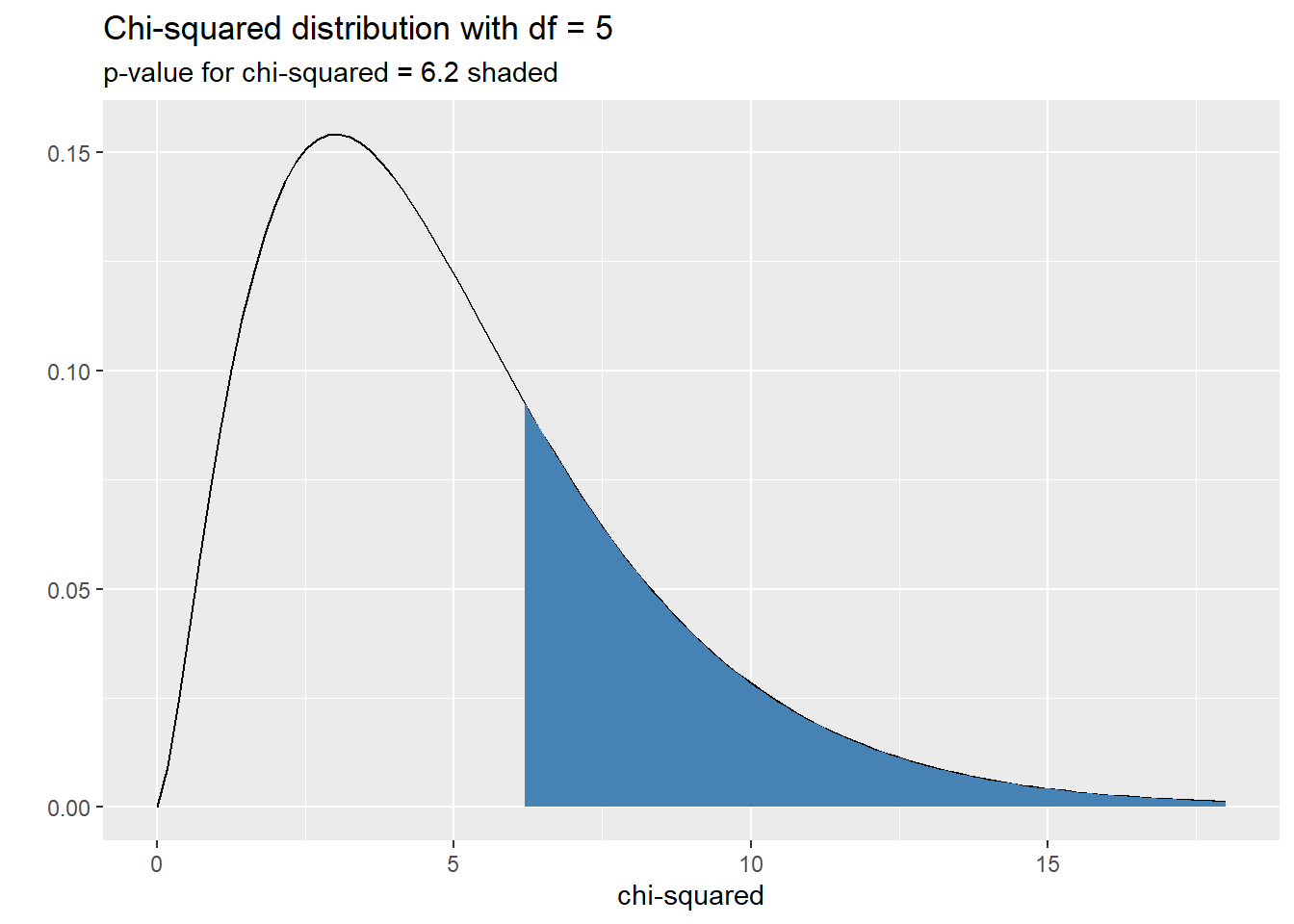

Once you’ve calculated your \(\chi^2\)-test statistic and have determined \(df\), you can calculate a \(p\)-value and execute your hypothesis test. For any chi-squared test, “more favorable for the alternative hypothesis” will always mean roughly more deviation from the expected counts, so \(p\)-values are always upper tail areas. For example, suppose you’re performing some hypothesis test and find

\[ \begin{array}{ccl} df & = & 5 \text{ and } \\ \chi^2 & = & 6.2. \end{array} \]

The image below shows a plot of the chi-squared distribtuion with \(df = 5\) and shades the \(p\)-value of our imaginary hypothesis test.

ggplot(tibble(x = c(0, 18)), aes(x = x)) +

geom_function(fun = dchisq,

args = list(df = 5)) +

stat_function(fun = dchisq,

args = list(df = 5),

geom = "area",

xlim = c(6.2, 18),

fill = "steelblue") +

labs( x = "chi-squared", y = "",

title = "Chi-squared distribution with df = 5",

subtitle = "p-value for chi-squared = 6.2 shaded")

From this we see that we can calculate \(p\)-values for chi-squared tests using the function pchisq(test_stat, df = df, lower.tail = FALSE). For example, the \(p\)-value for our imaginary hypothesis test would be

## [1] 0.2872417For more information about R’s functions for chi-squared distributions, see Chapter 4’s section on these distributions.

This section summarized the basics of all chi-squared tests from a big picture. From this summary note that we essentially only need to figure out two things.

How does one determine the expected counts for each cell of the table?

What are the appropriate numbers of degrees of freedom for the hypothesis test?

The answers to these questions depends on the type of chi-squared test you’re performing. The next sections provide these answers for the two types of chi-squared tests.

6.2.3.1 Chi-squared goodness of fit

These hypothesis tests are used to see if your sample data deviates from its expected distribution. For example, we expect the outcomes of a single die to be equally likely and could use a chi-squared goodness of fit test to check this.

The null and alternative hypothesis for a goodness of fit test are

\[ \begin{array}{cl} H_0 & \text{the data follow the expected distribution} H_a & \text{the data do not follow the expected distribution} \end{array} \]

and rejecting the hypothesis implies that the population distribution differs from the expected distribution.No surprise, the expected distribution determines the expected counts for each cell in your table. For example, if you toss a single die 120 times and record the outcome, you’d expect to get \(120/6 = 20\) of each number 1-6.

To perform these hypothesis tests we need to know the number of degrees of freedom. You can think of this as the minimum number of cell counts in your table you need to completely determine the remaining cells. For example, if you toss a die 120 times and know the counts of the outcomes 1 through 5, you can figure out how many 6’s you got (120 minus the sum of 1-5 counts). But if you removed any of the other counts, you wouldn’t have enough information to complete the table. Thus you have 5 degrees of freedom in the die toss example. For one-way tables and goodness of fit tests

\[ df = k -1\]

where \(k\) is the number of cells in your table.

Determining the expected counts can be slightly more involved than the die toss example. Let’s return to the S&P500 example mentioned in the previous section. We want to test to see if the direction of the stock market on one day is independent from the direction of the stock market on the previous day. If we assume that the directions are independent, then the probability of the market going up on any day is the same. This might be tough to test on its own, but notice that we can count the number of days between two consecutive up days. For instance, “up up” would give a count of one, “up down up” a count of two, etc. The table below tallies all such runs from 1990 to 2011.

##

## 1 2 3 4 5 6 7+

## 1532 760 338 194 74 33 17If the daily directions are independent, what would the table above look like? The number of days between consecutive “up” days should follow a geometric distribution! To determine a geometric distribution, we need to know the probability of a success, which is the probability that the S&P500 goes up on a given day. We can estimate this probability using the following code chunk looks at the raw S&P500 data from 1990 to 2011.

sp500_1950_2018 %>%

filter( as.character(Date) >= '1990' &

as.character(Date) <= '2012') %>%

mutate(dir = if_else( Close > Open, TRUE, FALSE)) %>%

summarize( upProb = sum(dir)/length(dir))## # A tibble: 1 × 1

## upProb

## <dbl>

## 1 0.531So the probability that the S&P500 goes up on a given day is rough 53.1%. Using this, we can generate a table of expected counts.

# total number of up-to-up runs

n <-sum(spTable)

#list of factors

days <- c(1:6, "7+")

#probability/proportion of each factor level

probs <- c( dgeom(0:5,.531), pgeom(5, .531, lower.tail = F))

# expected counts

expected <- n*probs

expected## [1] 1565.38800 734.16697 344.32431 161.48810 75.73792 35.52108 31.37361# display all of the above in a horizontally organized table

t( tibble(days, prob = round(probs, 3), expected = round(expected, 3))) %>% kable()| days | 1 | 2 | 3 | 4 | 5 | 6 | 7+ |

| prob | 0.531 | 0.249 | 0.117 | 0.055 | 0.026 | 0.012 | 0.011 |

| expected | 1565.388 | 734.167 | 344.324 | 161.488 | 75.738 | 35.521 | 31.374 |

We’re finally ready to calculate our test statistic and execute a hypothesis test. Let’s use a significance level of \(\alpha = .05\). We’re specifically testing

\[ \begin{array}{cl} H_0: & \text{the number of days between consecutive "up" days} \\ & \text{on the S&P500 follows a geometric distribution} \\ H_a: & \text{the count does not follow a geometric distribution.} \end{array} \]

Note that our table has 7 cells in it, so

\[ df = 7 - 1 = 6. \]

We will calculate our test statistic in a few steps. Recall that it is

\[ \chi^2 = \sum_{all \\cells} \frac{(O-E)^2}{E} \]

##

## 1 2 3 4 5 6 7+

## 0.71212923 0.90898305 0.11616053 6.54551974 0.03987916 0.17893220 6.58517561## [1] 15.08678Now that we have our test statistic in hand we can calculate a \(p\)-value.

## [1] 0.0195924Since our \(p\)-value is less than .05, we reject the null and accept the alternative. In other words, the counts of runs of different lengths do not appear to follow a geometric distribution. This implies that the direction of the S&P500 on one day depends on the direction of the previous day.

This seems like it could be useful information! But how could one use it? This is a harder question and for the most part beyond the scope of this course. For fun, let’s look at the z-scores of each cell instead of the squared z-scores.

##

## 1 2 3 4 5 6 7+

## -0.8438775 0.9534060 -0.3408233 2.5584213 -0.1996977 -0.4230038 -2.5661597The only two run lengths that seem particularly unusual are runs of length 4 and 7 or more. In particular there are more runs of length 4 than we’d expect and fewer runs of length seven or more. Does that mean you should bet on the market going up if there are three down days in a row? Not without more analysis! At a bare minimum you’d want to calculate a conditional probability: what’s the probability the market goes up after three down days?

Note: This is, of course, far from anything related to actual financial advice! This example is merely to demonstrate one of the myriad of things you can investigate with goodness of fit tests.

6.2.3.2 Chi-squared test of independence

These tests are used to check for an association between two categorical variables. Because of this you will likely see chi-squared tests of independence all over the place. For example, the results of clinical trials in medicine are often analyzed using chi-squared tests of independence since you have two groups of individuals (those in the treatment group and those in the placebo group) and there are often two or more outcomes (symptoms improve, symptoms don’t improve, etc.). Recall from above that one applies these tests to two-way tables. We’ll work through the example testing for an association between marital status and smoking in the United Kingdom (the data comes from the smoking data set from the openintro package). The observed counts from a survey are displayed below.

##

## Divorced Married Separated Single Widowed

## No 103 669 46 269 183

## Yes 58 143 22 158 40The null and research hypotheses for a test of independence are

\[ \begin{array}{cl} H_0: & \text{the variables are independent} \\ H_a: & \text{the variables are dependent} \end{array} \]

For our example, the null hypothesis is that smoking and marital status are not associated; the research hypothesis is that there is an association between smoking and marital status.

As with goodness of fit tests, we need to determine the expected counts for our two-way table assuming the null hypothesis is true. Recall from probability that if two events \(A\) and \(B\) are independent, then \(P(A \& B) = P(A)P(B)\) (caution! This is only true when the events are independent). Suppose event \(A\) corresponds to row \(i\) in your two way table and event \(B\) to column \(j\). Let \(n\) denote the sum of all entries in your table; \(n\) is sometimes called the grand total. Then

\[ P(A) = \frac{\text{row i total }}{n} \quad \text{and} \quad P(B) = \frac{\text{column j total }}{n} \]

so if \(A\) and \(B\) are independent

\[ P( A \& B ) = \frac{\text{row i total }}{n} \frac{\text{column j total }}{n} = \frac{\text{row i total}\cdot \text{column j total }}{n^2} \]

Thus the expected count in the \((i,j)\)-cell of our two-way table is

\[ n \cdot P( A \& B ) = \frac{\text{row i total}\cdot \text{column j total }}{n} \]

To make this more concrete, let’s find the expected count for the \((1,1)\)-cell in our observed two-way table above. This cell corresponds to divorced people who do not smoke. The code chunk below calculates the corresponding row and column totals along with the grand total.

## [1] 1691 1270 161So if the smoking and marital status are independent, we’d expect to see

## [1] 120.9166divorced non-smokers in our survey results. The squared \(z\)-score for this cell would then be

## [1] 2.654765Now let’s make a table of expected counts. In practice, you’d use one of R’s in-built functions to perform this test, but here we’re demonstrating what’s going on “beneath the hood.” We use two nested “for” loops to make the table of expected counts.

# define an matrix with the right dimensions of all zeroes

expected <- matrix( rep(0, prod(dim(observed))),

nrow = dim(observed)[1],

ncol = dim(observed)[2])

# iterate over all rows

for(i in 1:dim(observed)[1]){

#iterage over all columns

for(j in 1:dim(observed)[2]){

#update i,j-th entry with expected count

expected[i,j] <- sum(observed[i,])*sum(observed[,j])/sum(observed)

}

}

expected## [,1] [,2] [,3] [,4] [,5]

## [1,] 120.91662 609.8403 51.07037 320.6919 167.48078

## [2,] 40.08338 202.1597 16.92963 106.3081 55.51922Is it a problem that none of the entries in the table above are integers? No! We should think of the expected counts as average outcomes.

We can now easily calculate our table of squared \(z\)-scores and our chi-squared test statistic. After that, to perform out hypothesis test, we simply need to determine the appropriate number of degrees of freedom \(df\).

##

## Divorced Married Separated Single Widowed

## No 2.6547648 5.7389881 0.5033971 8.3321480 1.4380526

## Yes 8.0084354 17.3123870 1.5185614 25.1349832 4.3380684The chi-squared test statistic is the sum of the entries in the table above.

## [1] 74.97979To calculate a \(p\)-value and perform this hypothesis test, we simply need to determine \(df\). As with goodness of fit tests, \(df\) is the minimum number of cell counts in your table you need to completely determine the remaining cells. If \(R\) and \(C\) are the number of rows and columns in your two-way table, then

\[ df = (R-1)\times (C-1) \]

so for our example

\[ df = (2-1)\times (5 -1 ) = 4. \]

Thus the \(p\)-value for our hypothesis test is

## [1] 2.012302e-15This \(p\)-value is smaller than all typical significance levels, so we reject the null and accept the research. In particular, the data provide compelling evidence to believe that there is an association between marital status and smoking. What might this association look like? Let’s look at the \(z\)-scores of our table counts instead of the squared \(z\)-scores.

##

## Divorced Married Separated Single Widowed

## No -1.6293449 2.3956185 -0.7095048 -2.8865460 1.1991883

## Yes 2.8299179 -4.1608157 1.2322992 5.0134801 -2.0828030It appears that married people are way less likely to smoke and single people are way more likely to smoke than expected. As in the previous example for goodness of fit, we could do a more in-depth analysis to describe the association we detected.

6.3 Analyzing numerical variables

We’ve finished our discussion of analyzing categorical variables and now turn our attention to analyzing numerical variables. There are many different numerical variables one can analyze but we will focus on making inferences about population averages. We use the Greek letter mu \(\mu\) to denote a population average and \(\overline{x}\) to denote a sample average. Similarly, we use the Greek letter \(\sigma\) to denote the population standard deviation and \(s\) to denote sample standard deviations. We will use sample averages to make inferences about population averages, but in the process we will have to estimate \(\sigma\) using \(s\). This creates a small dilemma that we’ll define and address below.

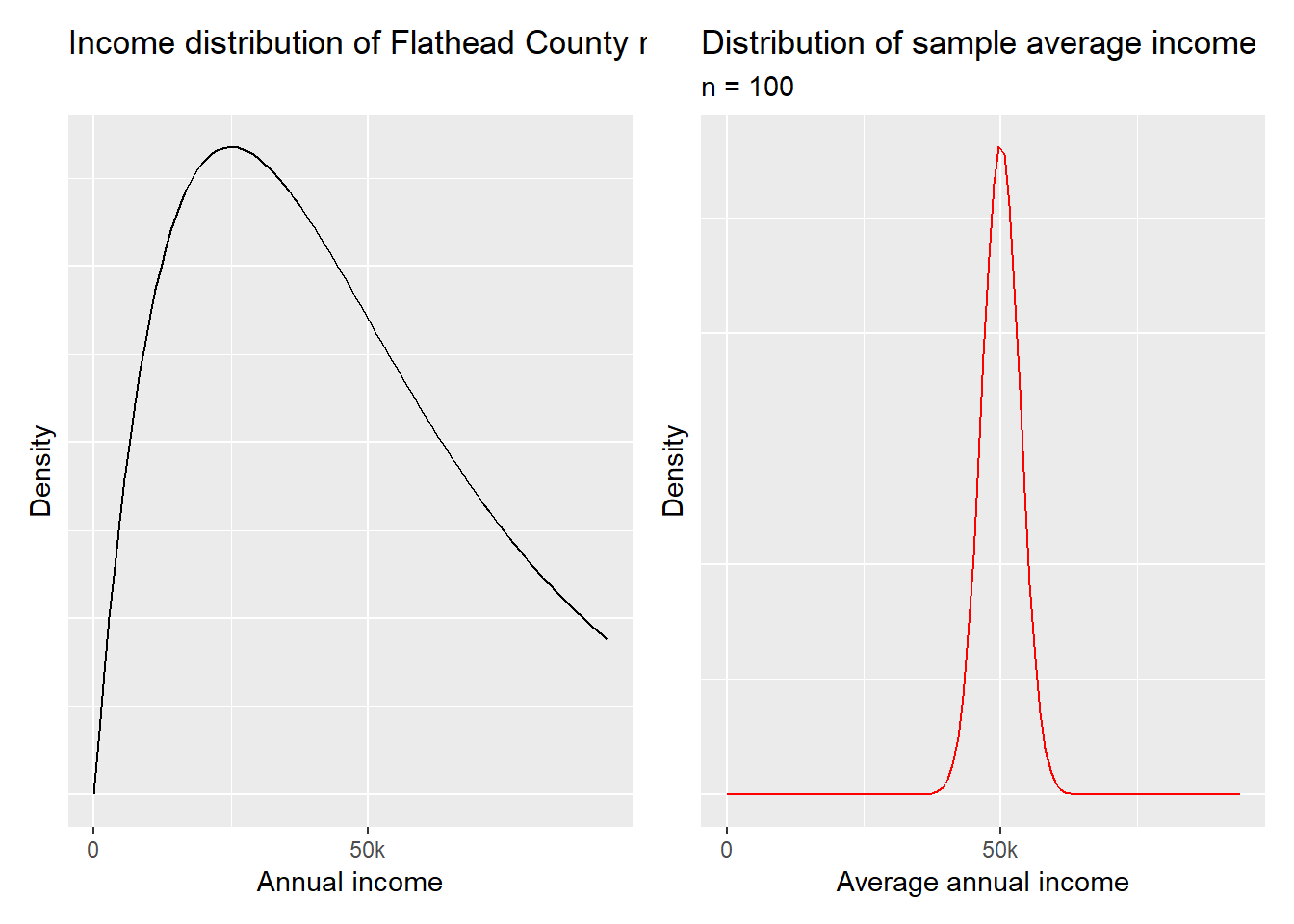

One distinction that becomes more important and sometimes difficult to make when analyzing numerical variables is the difference between population distributions and sampling distributions. This was an easy distinction to make when we were dealing with categorical variables, because the population distributions were discrete things (proportion of success and proportion of failure), but sampling distributions were continuous. When dealing with numerical variables, however, the population and sampling distributions are often both continuous.

As an example, suppose you are trying to investigate the average income of residents in Flathead County. You take a sample of 100 adult county residents. You sample from a population and then use the sampling distribution of \(\overline{x}\) to make an inference about the county average population. The plot below helps us visualize the difference between these two distributions. The distributions below are made up and serve merely to illustrate the differences between the two distributions.

The plots above demonstrate that population distributions can be skewed (or in practice have a totally unknown shape), but under fairly mild hypotheses, the sampling distribution of \(\overline{x}\) will be symmetric and unimodal. This is the central limit theorem in action again. As with categorical variables, this will be the main driver of inference about numerical variables for us.

Central Limit Theorem (for a single sample average): Suppose we have \(n\) independent observations of a numerical variable. If either

the population we’re sampling from is approximately normal or

\(n\) is sufficiently large or

then the sampling distribution of \(\overline{x}\) is approximately normal with

\[ E(\overline{x}) = \mu \quad \text{and} \quad SE( \overline{x}) = \frac{\sigma}{\sqrt{n}}\]

No surprise, the standard error of the sampling distribution of \(\overline{x}\) depends on the standard deviation of the population. Unfortunately, we almost never know \(\sigma\) and almost never know if the population we’re sampling from is approximately normally distributed. For the latter dilemma we have a few rules of thumb to guide us.

Normality conditions:

\(n <30\) and there are no clear outliers in your data, it is common to assume your sample comes from an approximately normal population. Note that this is simply a rule of thumb! To check for outliers, use \(z\)-scores and look for the maximum in absolute value.

\(n \geq 30\) and there are no extreme outliers in your data.

If either of these conditions holds and your observations are independent, then the we assume our sample meets the criteria in order to apply the CLT as described above. There is, however, one more small obstacle: we almost never know \(\sigma\), the population standard deviation, which determines the standard error of \(\overline{x}\). We do what appears to be the most logical thing, and estimate \(\sigma\) with \(s\), our sample standard deviation so that

\[ SE = \frac{\sigma}{\sqrt{m}} \approx \frac{s}{\sqrt{n}}\]

but (because of technical reasons that we’ll skip) it turns our that \(s\) is a biased estimator of \(\sigma\). Moreover, it consistently underestimates \(sigma\). Thus, our estimate of \(SE\) above is also an underestimate, which means that we should expect more variability among our sample statistics. This bias is especially pronounced when your sample size is small, and tends to zero as the sample size increases. The key to remedying this problem, at least for our purposes, is to model the sampling distribution of \(\overline{x}\) using \(t\)-distribution.

Recall from Chapter 4 that a \(t\)-distribution is a symmetric and unimodal distribution determined by a single parameter, \(df\), or degrees of freedom. Each \(t\)-distribution has “fatter tails” than the standard normal distribution, meaning that a higher proportion of observations will fall more than 2 standard deviations from the mean. As \(df\) gets larger, \(t\)-distributions approach the standard normal distribution, so the fat tails get skinnier. Notice that this helps solve the dilemma of using \(s\) to estimate \(\sigma\)!

In the next sections we’ll see how to use \(t\)-distributions to make inferences about population averages. Everything in the following two sections should feel very similar from a big picture perspective because the overall strategy for statistical inference remains the same.

6.3.1 Single sample mean

We begin our exploration of numerical variables by learning how to make inferences about a single population average. We will see how to calculate and interpret confidence intervals, then proceed to hypothesis testing.

To illustrate the techniques, we will use two examples in what follows. One example will be based on the faithful data set that is loaded by default in R. It gives the duration of 272 eruptions at Old Faithful along with the wait time between eruptions. We will also look the women data set which gives the height and weight of 15 American women from 1975. For both examples we will need to verify that we can apply the Central Limit Theorem, so we need to to check the normality conditions listed above.

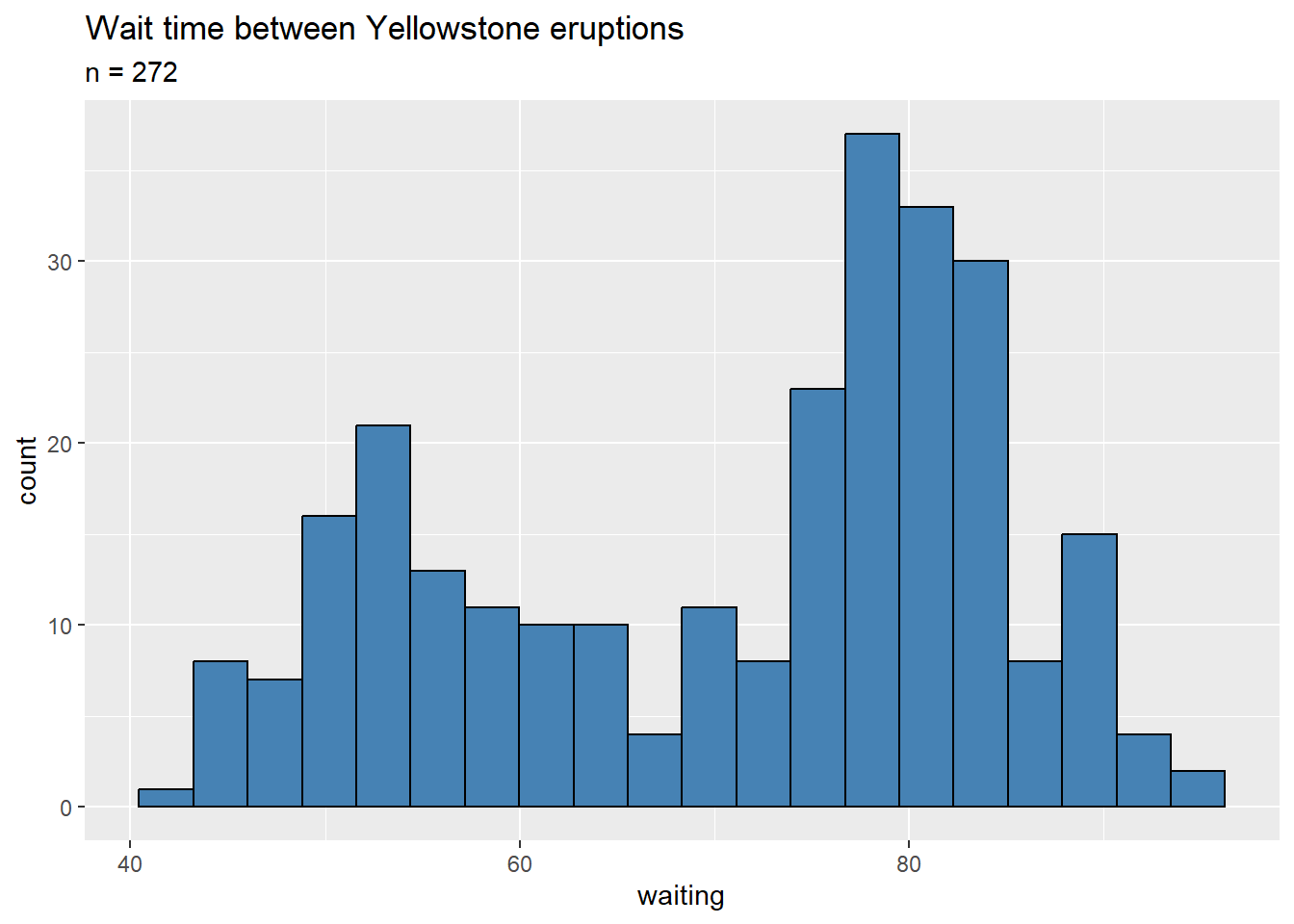

faithful: We’ll investigate the wait time between eruptions, the waiting variable in the faithful data set. Since the number of observations \(n = 272\), we simply need to check that the data set has no extreme outliers. We can do this in two ways, visually and numerically. First, we’ll look at a histogram of the data.

ggplot(faithful, aes(x = waiting)) +

geom_histogram(color = "black"

, fill = "steelblue"

, bins = 20) +

labs( title = "Wait time between Yellowstone eruptions"

, subtitle = "n = 272")

The wait time between eruptions appears to be bimodal, but this is no problem for us. From the histogram above, no observations seem particularly extreme. Let’s double check this with some calculations. We can look at a summary of the wait times:

## Min. 1st Qu. Median Mean 3rd Qu. Max.

## 43.0 58.0 76.0 70.9 82.0 96.0or translate this summary into \(z\)-scores

## Min. 1st Qu. Median Mean 3rd Qu. Max.

## -2.0520 -0.9487 0.3754 0.0000 0.8167 1.8465These calculations confirm our observation: since no measurement is more than roughly 2 standard deviations away from the mean, our sample contains no outliers.

We also need to check independence of observations here. Does the wait time from one eruption to another influence the wait time until the next eruption? Quite possibly, but without more information it is hard to say. To keep things simple, we point out that independence is difficult to verify in this case, but we assume the observations are independent.

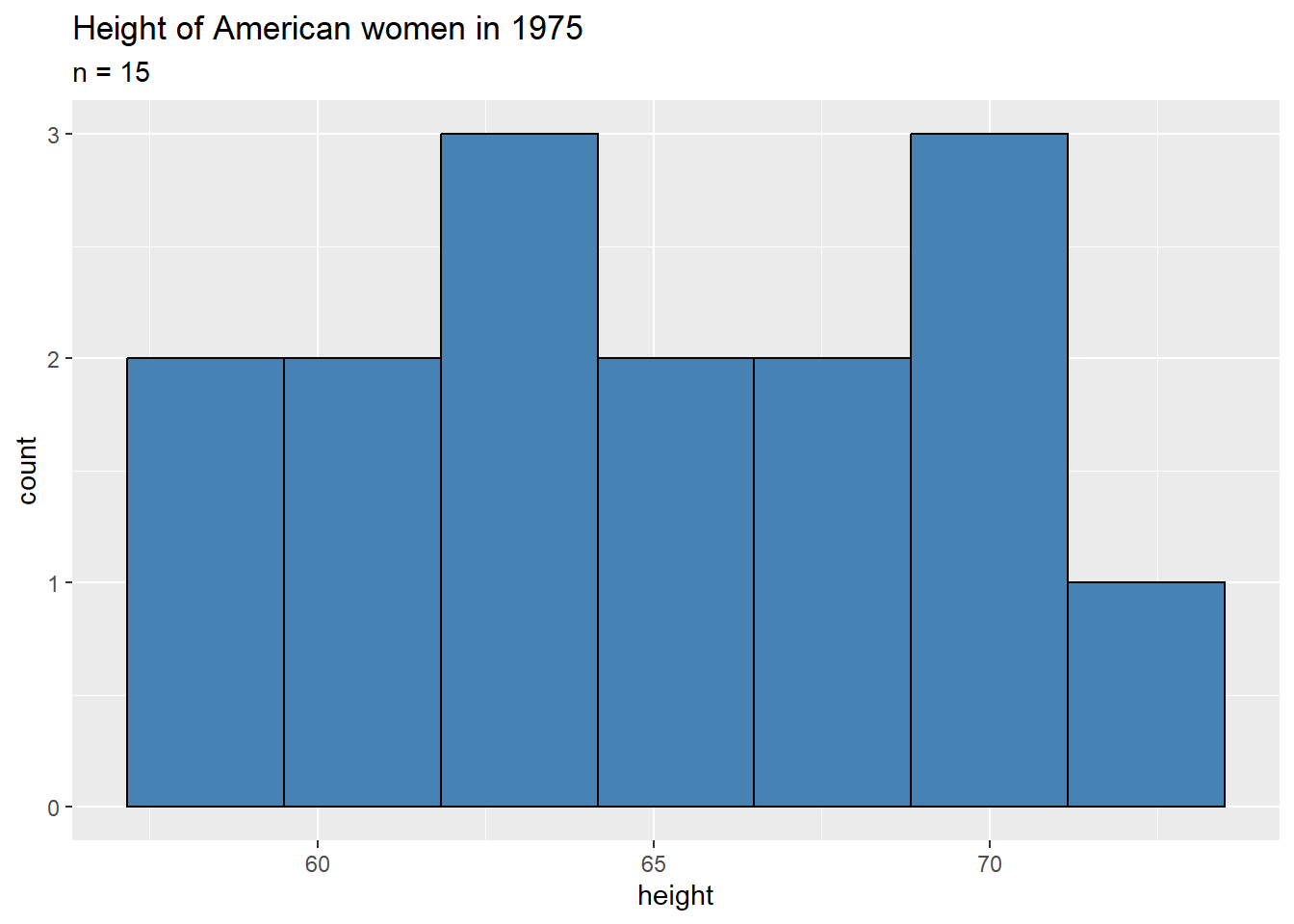

women: We’ll look at the height variable. In this case the number of observations is small as \(n = 15\). But not all is lost! If we can safely assume the population is normally distributed, we can apply the central limit theorem. As above, we first look at a histogram

ggplot(women, aes(x = height)) +

geom_histogram(color = "black"

, fill = "steelblue"

, bins = 7) +

labs( title = "Height of American women in 1975"

, subtitle = "n = 15")

No surprise, the distribution of heights in this sample is fairly uniform and there are no clear outliers in the data. We’ll check this using a summary and \(z\)-scores again.

## Min. 1st Qu. Median Mean 3rd Qu. Max.

## 58.0 61.5 65.0 65.0 68.5 72.0and \(z\)-scores:

## Min. 1st Qu. Median Mean 3rd Qu. Max.

## -1.5652 -0.7826 0.0000 0.0000 0.7826 1.5652Since all observations are less than 2 standard deviations from the mean, there are no outliers whatsoever in this data set. In this case it is safe to assume that the population is normally distributed, which means that we can apply the central limit theorem to this example as well!

We will use the CLT to estimate the population averages and also make inferences about them. Before we can proceed, we need to establish one more fact. Recall from above that we will model the sampling distribution of \(\overline{x}\) with a \(t\)-distribution. Unlike normal distributions that are determined by a mean \(\mu\) and a standard deviation \(\sigma\), a \(t\)-distribution is determined by a single parameter, degrees of freedom \(df\).

If your sample has \(n\) measurements, then the sampling distribution is best modeled by a \(t\)-distribution with \[ df = n - 1\]

6.3.1.1 Confidence intervals

Confidence intervals for single sample means are calculated using the typical pattern:

\[ \text{point est.} \pm \text{critical value}\cdot SE \]

where the point estimate is the sample average \(\overline{x}\) and the standard error \(SE\) is given by the CLT \(SE = \sigma / \sqrt{n} \approx s / \sqrt{n}\). If your sample meets the criteria for applying the central limit theorem, the only new datum we need to calculate is the critical value. Since the sampling distribution of \(\overline{x}\) is best modeled with a \(t\)-distribution with \(df = n-1\), we should use this distribution to find the critical value.

You can calculate these \(t\)-critical values using the R function qt in the exact same way you found \(z\)-critical values in the previous section! In particular, if calculating an \(\alpha\)% confidence interval, the critical value is \(t^\star_{df} =\) qt( (1-alpha)/2, df = n-1, lower.tail = FALSE ) where alpha is the confidence level as a decimal rather than a percentage.

Let’s now calculate and interpret 95% confidence intervals for the examples discussed in the section above, women and faithful.

faithful: We want to calculate a 95% confidence interval for the average wait time between eruptions at the Old Faithful geyser in Yellowstone (in minutes). To do so, we need four numbers: \(\overline{x}\) the sample average, \(n\) the sample size, \(s\) the sample standard deviation, and \(t^\star_df\) the \(t\)-critical value. The code chunk below calculates all of these numbers along with the standard error, then prints the \(t\)-critical value.

n <- length(faithful$waiting)

xBar <- mean(faithful$waiting)

s <- sd(faithful$waiting)

SE <- s / sqrt(n)

tStar <- qt( (1 - .95)/2, df = n-1, lower.tail = FALSE)

tStar## [1] 1.968756Notice that \(t^\star_df\) is very close to the \(z\)-critical value for a 95% confidence interval. This is because 272 is large, so the associated \(t\)-distribution is very close to the standard normal curve.

Now we can combine the above into a confidence interval.

## [1] 69.27418 72.51994Thus, with 95% confidence, the average wait time between two eruptions at Old Faithful is between roughly 69.3 and 72.5 minutes.

women: We want to calculate a 95% confidence interval for the average height of American women in 1975. We need the same numbers as above to make this calculation and the code chunk below does exactly that.

n <- length(women$height)

xBar <- mean(women$height)

s <- sd(women$height)

SE <- s / sqrt(n)

tStar <- qt( (1 - .95)/2, df = n-1, lower.tail = FALSE)

tStar## [1] 2.144787This time around, notice that \(t^\star_df \approx 2.144\) which is larger than the \(z\)-critical value for a 95% confidence interval. This is because the \(t\)-distribution with \(14\) degrees of freedom has fatter tails than the standard normal distribution. This is exactly what we want because we are more uncertain about our use of \(s\), the sample standard deviation, as an estimate of \(\sigma\), the population standard deviation.

Now, calculating the confidence interval:

## [1] 62.52341 67.47659Our calculations imply that the average height of American women in 1975 was between about 62.52 and 67.48 inches, with 95% confidence.

6.3.1.2 Hypothesis testing

Now that we have confidence intervals under our belt and understand the details of using \(t\)-distributions for making inferences about means, we can move through hypothesis testing fairly quickly. When making inferences about an average, the null and research hypotheses are statements about the value of the true population mean.

\[ \begin{array}{cc} H_0: & \mu = \mu_0 \\ H_a: & \mu \neq \mu_0 \text{ or } \mu > \mu_0 \text{ or } \mu < \mu_0 \end{array} \]

Remember that one assumes the null hypothesis is true when performing a hypothesis test. Note: unlike analyzing proportions, the standard error depends only on your sample and not the null mean! This is because \[ SE = \frac{\sigma}{\sqrt{n}} \approx \frac{s}{\sqrt{n}} \] whenever we can apply the Central Limit Theorem.

To execute this hypothesis test, we perform the usual steps.

Set a significance level \(\alpha\)

Calculate all appropriate sample statistics. In this case the sample average \(\overline{x}\) and standard deviation \(s\).

Calculate the standard error \(SE\).

Calculate your test statistic. In this case, it is called a \(T\)-statistic since the sampling distribution is best modeled with a \(t\)-distribution with \(df = n-1\). These statistics are the same as \(z\)-scores.

\[ T = \frac{\overline{x} - \mu_0}{SE} \]

Note that this is where we’re using the null hypothesis! The \(T\)-statistic measures how far our sample average is from the null average in terms of standard error.

Calculate the \(p\)-value of your test statistic. This depends on the type of test you’re performing. In this case, \(p\)-value calculations are exactly like they are for single sample proportions, except you use the function

ptin R instead ofpnorm.Compare your \(p\)-value to the significance level \(\alpha\) and make a conclusion.

We’ll use the two data sets above to go through two different hypothesis test examples.

women: As usual, we start with a question: “Were American women taller in 1975 than they were in 2022?” This question is actually a bit more complicated than it seems at first pass since the category “adult” spans a wide range of ages and average height varies with time. Our data set women looks at the average height of women aged between 30 and 39 in 1975, so really we should ask “Were American women aged 30-39 taller in 1975 than they were in 2022?”. We’ll use a significance level of \(\alpha = .1\).

Pulling data from Our World in Data, the average height of an woman aged between 30 and 39 in 2022 was roughly 64.4 inches. If \(\mu\) denotes the average height of women in 1975, then we set up our hypothesis test as

\[\begin{array}{cc} H_0: & \mu = 64.4 \\ H_1 & \mu > 64.4 \end{array}\]

The our sample mean, standard deviation, sample size, and standard error are calculated below.

n <- length(women$height)

xBar <- mean(women$height)

s <- sd(women$height)

SE <- s / sqrt(n)

#printing the calculated values

c(n = n, xBar = xBar, s = s, SE = SE)## n xBar s SE